Modern OCR for the Large Language & Vision Model Era

A reference page for all things Optical Character Recognition (OCR) using Large Language & Vision Models

Disclaimer: This is a work-in-progress research compilation and personal draft. The coverage is not comprehensive, and the analysis reflects one individual’s perspective on recent developments in the field. This resource should not be used as a definitive reference or benchmark for evaluating research contributions. Please refer to the original papers and conduct your own thorough review for academic or professional purposes.

VLM-Based Models

End-to-end vision-language models with learned multimodal representations for general document OCR.

| Date | Paper | Notes | Weights | Code | License |

|---|---|---|---|---|---|

| 2025-11 | Nemotron Parse 1.1 | notes | 885M, 885M-TC | | NVIDIA Open Model |

| 2025-12 | dots.ocr | notes | 3B | rednote-hilab/dots.ocr | MIT |

| 2025-01 | Ocean-OCR | notes | 3B | guoxy25/Ocean-OCR | Apache 2.0 |

| 2025-10 | olmOCR 2 | notes | 7B | allenai/olmocr | Apache 2.0 |

| 2025-10 | DeepSeek-OCR | notes | 3B | deepseek-ai/DeepSeek-OCR | MIT |

| 2025-09 | POINTS-Reader | notes | 4B | Tencent/POINTS-Reader | Apache 2.0 |

| 2025-09 | MinerU2.5 | notes | 1.2B | opendatalab/MinerU | AGPL-3.0 |

| 2025-06 | Infinity-Parser | notes | 7B | infly-ai/INF-MLLM | Apache 2.0 |

| 2025-05 | Dolphin | notes | 322M, 4B | ByteDance/Dolphin | MIT |

| 2025-04 | VISTA-OCR | notes | | | |

| 2025-03 | SmolDocling | notes | 256M | docling-project/docling | CDLA-Permissive-2.0 |

| 2025-02 | olmOCR | notes | 7B | allenai/olmocr | Apache 2.0 |

| 2024-09 | GOT-OCR2.0 | notes | 580M | Ucas-HaoranWei/GOT-OCR2.0 | Apache 2.0 |

| 2023-08 | Nougat | notes | small, base | facebookresearch/nougat | MIT (code), CC-BY-NC (wts.) |

Pipeline Models

Modular systems combining specialized detection, recognition, and layout analysis components.

| Date | Paper | Notes | Weights | Code | License |

|---|---|---|---|---|---|

| 2025-10 | PaddleOCR-VL | notes | 0.9B | PaddlePaddle/PaddleOCR | Apache 2.0 |

| 2025-07 | PaddleOCR 3.0 | notes | HuggingFace | PaddlePaddle/PaddleOCR | Apache 2.0 |

| 2025-06 | MonkeyOCR | notes | 3B | Yuliang-Liu/MonkeyOCR | Apache 2.0 |

| 2025-01 | Docling v2 | notes | models | DS4SD/docling | MIT (code), CDLA-Permissive-2.0 (weights) |

| 2024-09 | MinerU | notes | PDF-Extract-Kit | opendatalab/MinerU | Apache 2.0 |

Datasets & Benchmarks

This section catalogs datasets and benchmarks organized by OCR sub-domain. Many benchmarks span multiple domains; they are listed under their primary focus.

Datasets are organized into three tiers based on licensing:

- Commercial: Permissive licenses (Apache-2.0, MIT, CC-BY) that allow commercial use

- Research: Copyleft (GPL) or non-commercial (CC-NC) licenses, or explicit research-only restrictions

- Unclear: No license specified or mixed/complex licensing; verify before use
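As a rough illustration of the three tiers, the sketch below maps a single license string to a tier. The function name `license_tier` and the keyword patterns are assumptions made for this example, not part of any dataset tooling. It handles only simple, single-license strings; mixed or multi-source licenses still need manual review.

```python
import re

# Hypothetical keyword patterns; check restrictive markers before permissive ones,
# because "CC-BY-NC" also contains "CC-BY".
RESTRICTED = re.compile(r"gpl|\bnc\b|research")
PERMISSIVE = re.compile(r"apache|\bmit\b|cc-by|cdla-permissive")


def license_tier(license_text: str) -> str:
    """Return 'commercial', 'research', or 'unclear' for a single license string."""
    text = license_text.strip().lower()
    if not text or "unspecified" in text or "not stated" in text:
        return "unclear"
    if RESTRICTED.search(text):
        return "research"
    if PERMISSIVE.search(text):
        return "commercial"
    return "unclear"


for name in ["Apache-2.0", "CC-BY-NC-SA 3.0", "GPL-3.0", "CC-BY 4.0", ""]:
    print(repr(name), "->", license_tier(name))
```

A keyword match is only a first pass: entries such as "MIT (HF); sources include GPL/CC-NC" belong in the unclear tier even though this sketch would label them research.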

General Document OCR

Research Use Only

| Date | Paper | Size | Tasks | Notes | Data | License |

|---|---|---|---|---|---|---|

| 2024-12 | OmniDocBench | 1,355 pages | Text, formula, table, reading-order | Multi-domain benchmark (EN/ZH) | | |

| 2024-05 | Fox Bench | Dense multi-page docs | Full document parsing | EN/ZH documents | | |

Charts & Visualizations

Chart understanding spans several task families:

- Extraction: Chart-to-table or chart-to-dict conversion (structured data recovery)

- QA: Visual question answering requiring numerical reasoning, comparison, or lookup

- Summarization: Natural language descriptions at varying semantic levels

Most datasets use synthetic charts (programmatically generated from tables) or web-scraped visualizations. Evaluation typically relies on exact/relaxed match for QA, BLEU/ROUGE for summarization, and F1 or RMS error for extraction. Scale varies dramatically: from ~6K charts (ChartY) to 28.9M QA pairs (PlotQA).
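To make the exact/relaxed distinction concrete, here is a minimal sketch of a relaxed-match check. The 5% relative tolerance for numeric answers is a commonly used convention in chart QA evaluation and is an assumption here, as is the function name `relaxed_match`; individual benchmarks may define the rule differently.

```python
def relaxed_match(prediction: str, target: str, tolerance: float = 0.05) -> bool:
    """Numeric answers match within a relative tolerance; other answers need exact match."""

    def to_float(text: str):
        # Strip common formatting (thousands separators, trailing percent sign).
        try:
            return float(text.strip().rstrip("%").replace(",", ""))
        except ValueError:
            return None

    pred_num, target_num = to_float(prediction), to_float(target)
    if pred_num is not None and target_num is not None:
        if target_num == 0:
            return pred_num == 0
        return abs(pred_num - target_num) / abs(target_num) <= tolerance
    # Non-numeric answers fall back to case-insensitive exact match.
    return prediction.strip().lower() == target.strip().lower()


print(relaxed_match("10.4", "10"))  # within 5% of the target
print(relaxed_match("11", "10"))    # 10% off, outside the tolerance
```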

Commercial Use

Datasets with permissive licenses suitable for commercial training and deployment.

| Date | Paper | Size | Tasks | Notes | Data | License |

|---|---|---|---|---|---|---|

| 2024-10 | NovaChart | 47K charts, 856K instr. pairs | 18 chart types, 15 tasks (understanding + generation) | notes | GitHub, HuggingFace | MIT (code), Apache-2.0 (dataset) |

| 2024-04 | TinyChartData | 140K PoT pairs | Chart QA with program-of-thought learning | notes | GitHub, HuggingFace | Apache-2.0 |

| 2019-09 | PlotQA | 224K plots, 28.9M QA pairs | Plot question answering with OOV reasoning | notes | GitHub | MIT (code), CC-BY-4.0 (data) |

Research Use Only

Datasets with copyleft (GPL), non-commercial (CC-NC), or explicit research-only restrictions.

| Date | Paper | Size | Tasks | Notes | Data | License |

|---|---|---|---|---|---|---|

| 2024-04 | OneChart / ChartY | ~6K charts | Chart-to-dict structural extraction, bilingual (EN/ZH) | notes | Project, GitHub | Apache-2.0 (code); research use only |

| 2023-08 | SciGraphQA | 295K multi-turn, 657K QA pairs | Multi-turn scientific graph question answering | notes | GitHub, HuggingFace | Research only (Palm-2/GPT-4 terms) |

| 2023-08 | VisText | 12,441 charts | Chart captioning with semantic richness (L1-L3) | notes | GitHub | GPL-3.0 |

| 2022-05 | ChartQA | 20,882 charts, 32,719 QA pairs | Chart question answering with visual and logical reasoning | notes | GitHub | GPL-3.0 |

| 2022-03 | Chart-to-Text | 44,096 charts | Chart summarization: natural language text generation | notes | GitHub | GPL-3.0 (+ source restrictions) |

| 2019-09 | CHART-Infographics | ~200K synthetic, 4.2K real | Chart classification, text detection/OCR, role classification, axis/legend analysis | notes | Synthetic, PMC | CC-BY-NC-ND 3.0 (S), CC-BY-NC-SA 3.0 (PMC) |

| 2018-04 | DVQA | 300K bar charts, 3.5M QA pairs | Bar chart question answering | notes | GitHub | CC-BY-NC 4.0 |

License Unclear or Mixed

Datasets with unspecified licenses or complex multi-source licensing. Verify terms before use.

| Date | Paper | Size | Tasks | Notes | Data | License |

|---|---|---|---|---|---|---|

| 2024-04 | ChartThinker | 595K charts, 8.17M QA pairs | Chart summarization QA | notes | GitHub, HuggingFace | MIT (HF); sources include GPL/CC-NC |

| 2023-07 | DePlot | 516K plot-table, 5.7M QA pairs | Plot-to-table translation, chart question answering | notes | google-research, HuggingFace | Apache-2.0 (model); mixed data licenses |

| 2023-05 | UniChart | 611K charts | Pretraining corpus: table extraction, reasoning, QA, summ. | notes | GitHub | Varies by source (see notes) |

| 2023-04 | ChartSumm | 84K charts | Chart summarization with short and long summaries | notes | GitHub, Drive | Unspecified (no LICENSE file) |

| 2021-01 | ChartOCR / ExcelChart400K | 386,966 charts | Chart-to-table extraction: bar, line, pie | notes | GitHub, HuggingFace | MIT (HF); crawled data, paper silent on license |

| 2018-04 | Beagle | 42K SVG | Visualization type classification | notes | UW | MIT (code only); dataset license not stated |

Mathematical Expression Recognition

Mathematical expression recognition addresses printed, handwritten, and screen-captured formulas with complex 2D spatial structure. The domain is characterized by large symbol inventories (101 classes in CROHME benchmarks, 245 in HME100K, extended vocabularies for LaTeX rendering) and structural relationships such as superscripts, subscripts, fractions, radicals, and matrix layouts.

Task families include:

- Symbol recognition: Isolated classification with reject options for non-symbol junk

- Expression parsing: Combined segmentation, classification, and structural relationship extraction

- Image-to-LaTeX: End-to-end conversion from formula images to markup

- Matrix recognition: Hierarchical evaluation at matrix, row, column, and cell levels

Evaluation typically measures expression-level exact match rates (ExpRate) alongside object-level metrics for symbol segmentation, classification, and spatial relation detection. CROHME benchmarks indicate structure parsing remains a bottleneck: 90% accuracy with perfect symbol labels versus 67% end-to-end. Recent large-scale datasets (UniMER-1M with 1M+ samples) target real-world complexity beyond clean academic benchmarks, including noisy screen captures, font inconsistencies, and long expressions (up to 7,000+ tokens).
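As a simplified illustration of ExpRate, the sketch below counts a prediction as correct only when its LaTeX string equals the reference after whitespace normalization. This string comparison is an assumption for the example; CROHME-style evaluation compares structural representations, and the helper name `exp_rate` is hypothetical.

```python
def exp_rate(predictions: list, references: list) -> float:
    """Fraction of expressions whose predicted markup exactly matches the reference."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0

    def normalize(latex: str) -> str:
        # Collapse runs of whitespace; no other canonicalization is attempted.
        return " ".join(latex.split())

    matches = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return matches / len(references)


preds = ["x^{2}", "a + b"]
refs = ["x^{2}", "a+b"]
print(exp_rate(preds, refs))  # the second pair differs in spacing between tokens
```

The second pair shows why exact match is strict: `a + b` and `a+b` render identically but fail a naive string comparison, which is one motivation for structure-aware metrics.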

Datasets are organized into three tiers based on licensing:

- Commercial: Permissive licenses (Apache-2.0, MIT, CC-BY) that allow commercial use

- Research: Copyleft (GPL) or non-commercial (CC-NC) licenses, or explicit research-only restrictions

- Unclear: No license specified or mixed/complex licensing; verify before use

License Unclear or Mixed

Datasets with unspecified licenses or complex multi-source licensing. Verify terms before use.

| Date | Paper | Size | Tasks | Notes | Data | License |

|---|---|---|---|---|---|---|

| 2024-04 | UniMER-1M | 1,061,791 train; 23,757 test (4 subsets) | Image-to-LaTeX: printed, complex, screen-captured, handwritten | notes | HuggingFace, OpenDataLab | Apache-2.0 (HF tag); upstream sources have mixed licenses |

| 2024-04 | MathWriting | 626k total (230k human, 396k synthetic) | Online handwritten math expression recognition, image-to-LaTeX | notes | Google Storage, HuggingFace | CC-BY-NC-SA 4.0 |

| 2022-03 | HME100K | 74,502 train + 24,607 test images | Handwritten mathematical expression recognition | notes | GitHub, Portal | Unspecified (no LICENSE file) |

Research Use Only

Datasets with copyleft (GPL), non-commercial (CC-NC), or explicit research-only restrictions.

| Date | Paper | Size | Tasks | Notes | Data | License |

|---|---|---|---|---|---|---|

| 2019-09 | CROHME 2019 + TFD | 1,199 test expressions, 236 pages (TFD) | Handwritten math + typeset formula detection | notes | TC10/11 Package, TFD GitHub | CC-BY-NC-SA 3.0 |

| 2016-09 | CROHME 2016 | 1,147 test expressions (Tasks 1/4), 250 test matrices | 4 tasks: formula, symbol, structure, matrix recognition | notes | TC10/11 Package | CC-BY-NC-SA 3.0 |

| 2014-09 | CROHME 2014 | 986 test expressions (10K symbols), 175 matrices, 10K+9K junk | Symbol recognition with reject, expression, matrix parsing | notes | TC11, TC10/11 Package, GitHub | CC-BY-NC-SA 3.0 |

Handwriting Recognition

Handwriting recognition for natural language text focuses on word-level and line-level detection and recognition in unconstrained conditions. Unlike mathematical expressions, which require parsing 2D spatial structure, general handwriting tasks emphasize sequential text extraction from camera-captured images, historical documents, and field notes. Evaluation uses localization metrics (IoU-based measures) for detection and character/word accuracy rates for recognition.

Datasets are organized into three tiers based on licensing:

- Commercial: Permissive licenses (Apache-2.0, MIT, CC-BY) that allow commercial use

- Research: Copyleft (GPL) or non-commercial (CC-NC) licenses, or explicit research-only restrictions

- Unclear: No license specified or mixed/complex licensing; verify before use

Commercial Use

Datasets with permissive licenses suitable for commercial training and deployment.

| Date | Paper | Size | Tasks | Notes | Data | License |

|---|---|---|---|---|---|---|

| 2021-09 | GNHK | 687 images, 39,026 texts, 172,936 chars | Word-level detection and recognition (camera-captured) | notes | GoodNotes, GitHub | CC-BY 4.0 |

Layout Detection & Document Structure

Document layout analysis identifies and classifies page regions (text blocks, tables, figures, titles, lists, headers, footers) and their spatial relationships. Unlike full OCR pipelines that also perform text recognition, layout detection focuses on structural segmentation: predicting bounding boxes and category labels for document components. Evaluation uses object detection metrics (mAP at various IoU thresholds) with per-category AP breakdowns for fine-grained analysis.

The field distinguishes between category-agnostic detection (locating all content regions regardless of type) and category-aware detection (classifying regions into semantic categories). Modern approaches balance two competing pressures: fine-grained taxonomies (20+ categories for specialized documents) versus coarse taxonomies (5-10 categories for cross-domain generalization).
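The mAP thresholds mentioned above are defined over intersection-over-union (IoU) between predicted and ground-truth boxes. A minimal sketch, assuming axis-aligned boxes given as `(x1, y1, x2, y2)` tuples (the function name `iou` is illustrative):

```python
def iou(box_a: tuple, box_b: tuple) -> float:
    """Intersection-over-union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Overlap width and height are clamped at zero for disjoint boxes.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


print(round(iou((0, 0, 2, 2), (1, 1, 3, 3)), 3))  # 1 / 7, prints 0.143
```

A prediction typically counts as a true positive at threshold t when its IoU with a same-category ground-truth box is at least t; AP is then computed per category and averaged.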

Models

| Date | Paper | Notes | Weights | Code | License |

|---|---|---|---|---|---|

| 2025-03 | PP-DocLayout | notes | L, _plus-L, M, S | PaddlePaddle/PaddleX | Apache 2.0 |

| 2025-10 | PP-DocLayoutV2 | notes | Model | PaddlePaddle/PaddleOCR | Apache 2.0 |

| 2021-08 | LayoutReader | notes | Model | GitHub | Research only |

Commercial Use

Datasets with permissive licenses suitable for commercial training and deployment.

| Date | Paper | Size | Tasks | Notes | Data | License |

|---|---|---|---|---|---|---|

| 2022-08 | DocLayNet | 80,863 pages, 11 categories | Layout detection, reading order | | GitHub | CDLA-Permissive-1.0 |

| 2019-09 | PubLayNet | 360K+ pages, 5 categories | Layout detection for scientific documents | | GitHub | CDLA-Permissive-1.0 |

| 2019-05 | DocBank | 500K pages, 13 categories | Weakly supervised layout detection | notes | GitHub | Apache 2.0 |

Research Use Only

Datasets with copyleft (GPL), non-commercial (CC-NC), or explicit research-only restrictions.

| Date | Paper | Size | Tasks | Notes | Data | License |

|---|---|---|---|---|---|---|

| 2021-08 | ReadingBank | 500K document pages | Reading order detection | notes | GitHub | Research only (no redistribution) |

License Unclear or Mixed

Datasets with unspecified licenses or complex multi-source licensing. Verify terms before use.

| Date | Paper | Size | Tasks | Notes | Data | License |

|---|---|---|---|---|---|---|

Table Structure Recognition

Table structure recognition (TSR) extracts the logical structure of tables: cells, rows, columns, spanning relationships, and hierarchical organization. Unlike table detection (which only locates table regions) or table understanding (which also interprets content semantics), TSR focuses on parsing the structural grid: mapping visual layouts to machine-readable formats such as HTML, LaTeX, or specialized tokenization schemes.

The field is characterized by two main architectural families:

- End-to-end vision-language models: Directly predict table structure as token sequences from images (image-to-markup)

- Pipeline systems: Combine separate modules for cell detection, structure parsing, and optional content extraction

Evaluation uses tree edit distance (TED) metrics for structural accuracy and mAP/IoU metrics for cell localization. Modern benchmarks emphasize complex spanning (merged cells across rows/columns), multi-page tables, and domain-specific formats (financial statements, scientific papers, invoices).
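One widely used TED-based score is TEDS (tree-edit-distance-based similarity), proposed alongside PubTabNet. As a reference sketch, with $T_a$ and $T_b$ the predicted and ground-truth HTML trees and $|T|$ the number of nodes in $T$:

$$
\mathrm{TEDS}(T_a, T_b) = 1 - \frac{\mathrm{EditDist}(T_a, T_b)}{\max(|T_a|, |T_b|)}
$$

A score of 1 means the trees are identical. Individual benchmarks may use variants (for example, a structure-only score that ignores cell content), so check each dataset's evaluation script.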

Datasets are organized into three tiers based on licensing:

- Commercial: Permissive licenses (Apache-2.0, MIT, CC-BY, CDLA-Permissive) that allow commercial use

- Research: Copyleft (GPL) or non-commercial (CC-NC) licenses, or explicit research-only restrictions

- Unclear: No license specified or mixed/complex licensing; verify before use

Commercial Use

Datasets with permissive licenses suitable for commercial training and deployment.

| Date | Paper | Size | Tasks | Notes | Data | License |

|---|---|---|---|---|---|---|

| 2021-09 | PubTables-1M | ~1M tables | Structure recognition, detection | | HuggingFace | CDLA-Permissive-2.0 |

| 2019-11 | PubTabNet | 568K tables | Structure recognition | | GitHub | CDLA-Permissive-1.0 |

| 2018-XX | FinTabNet | 113K tables | Structure recognition | | IBM Developer, HF (.c) | CDLA-Permissive-1.0 |

Research Use Only

Datasets with copyleft (GPL), non-commercial (CC-NC), or explicit research-only restrictions.

| Date | Paper | Size | Tasks | Notes | Data | License |

|---|---|---|---|---|---|---|

License Unclear or Mixed

Datasets with unspecified licenses or complex multi-source licensing. Verify terms before use.

| Date | Paper | Size | Tasks | Notes | Data | License |

|---|---|---|---|---|---|---|

Specialized Methods (No New Data)

Papers that introduce methods or training techniques but do not release new datasets. Included for completeness; see original papers for evaluation details.

Chart Understanding

| Date | Paper | Notes | Weights | Code | License |

|---|---|---|---|---|---|

| 2024-05 | SIMPLOT | notes | | GitHub | No license stated |

| 2024-04 | TinyChart | notes | 3B | X-PLUG/mPLUG-DocOwl | Apache 2.0 |

| 2023-05 | UniChart | notes | base, ChartQA | vis-nlp/UniChart | MIT |

Table Structure Recognition

| Date | Paper | Notes | Weights | Code | License |

|---|---|---|---|---|---|

| 2023-05 | OTSL | notes | | | Not specified |

Mathematical Expression Recognition

| Date | Paper | Notes | Weights | Code | License |

|---|---|---|---|---|---|

| 2024-04 | UniMERNet | notes | 100M/202M/325M | opendatalab/UniMERNet | Apache 2.0 |

| 2022-03 | SAN | notes | | Code not publicly released | N/A |

Evaluation & Metrics

| Date | Paper | Notes | Code | License |

|---|---|---|---|---|

| 2024-09 | CDM | notes | opendatalab/UniMERNet | Apache 2.0 |

Handwriting Generation

| Date | Paper | Notes | Weights | Code | License |

|---|---|---|---|---|---|

| 2020-08 | Decoupled Style Descriptors | notes | | GitHub | Non-commercial research only |

PaddleOCR 3.0: Open-Source OCR and Document AI Toolkit

Paper: PaddleOCR 3.0: Advancements in Open-Source OCR and Document AI

Code: PaddlePaddle/PaddleOCR

Models: PaddlePaddle on HuggingFace

Docs: paddlepaddle.github.io/PaddleOCR

TL;DR

PaddleOCR 3.0 is an open-source OCR and document AI toolkit (Apache 2.0) that ships three main solutions: PP-OCRv5 (lightweight multilingual OCR), PP-StructureV3 (end-to-end document parsing), and PP-ChatOCRv4 (OCR + LLM for KIE/QA). The system includes deployment infrastructure (high-performance inference, Triton/FastAPI serving, on-device, MCP integration). On OmniDocBench OCR, PP-OCRv5 ranks first on average across 17 scenarios. On document parsing, PP-StructureV3 achieves Edit distance 0.145 (EN) and 0.206 (ZH), outperforming MinerU and Docling.

What kind of paper is this?

Primarily $\Psi_{\text{Resource}}$, with substantial $\Psi_{\text{Method}}$ components.

- Dominant: $\Psi_{\text{Resource}}$: The paper delivers a production-ready toolkit with Apache 2.0 licensing, pre-trained model zoo (PP-OCRv5 server/mobile variants, PP-StructureV3, PP-ChatOCRv4), layered API/CLI interfaces, and comprehensive deployment infrastructure (HPI with automatic backend selection, FastAPI/Triton serving, on-device via Paddle-Lite, MCP server). The resource framing is explicit: enabling reproducible OCR and document understanding for the research and practitioner communities. Performance optimizations (73% latency reduction on T4 for mobile recognition) and serving options are presented as reusable tooling rather than operational validation.

- Secondary: $\Psi_{\text{Method}}$: PP-OCRv5 introduces a single multilingual model supporting Simplified Chinese, Traditional Chinese, Pinyin, English, and Japanese. PP-StructureV3 integrates layout analysis, table recognition, and specialized document items (seals, formulas, charts). PP-ChatOCRv4 combines pipeline OCR outputs with vector retrieval, VLM-based answer extraction (PP-DocBee2), and LLM reasoning (ERNIE-4.5) with result fusion.

What is the motivation?

The report frames OCR and document parsing as foundational for downstream document understanding, with LLM and RAG adoption increasing demand for high-quality text extraction, structure recovery, and semantic interpretation across diverse document types.

- Multilingual and layout complexity: Documents span handwriting, multilingual text, complex layouts, tables, formulas, and charts. Prior OCR systems often specialize narrowly or require multiple models for different languages/scripts.

- Production barriers: Deploying OCR at scale requires optimized inference (low latency, resource efficiency), robust serving infrastructure, and on-device support. Existing open-source OCR tools may lack these deployment features or use restrictive licenses.

- Key information extraction and QA: Document workflows increasingly involve extracting structured information and answering questions over document content, motivating integrated OCR + LLM/VLM pipelines.

What is the novelty?

PP-OCRv5 single multilingual model: A unified recognition model handling Simplified Chinese, Traditional Chinese, Pinyin, English, and Japanese, with server (GPU-optimized) and mobile (CPU/resource-constrained) variants. The pipeline includes preprocessing, text detection, text-line orientation classification, and recognition.

PP-StructureV3 end-to-end parsing: Integrates layout analysis, table recognition, and structure extraction into a unified document parsing system. Extends to specialized items including seal text recognition, formula recognition, and chart analysis. Reports Edit distance 0.145 (EN) and 0.206 (ZH) on OmniDocBench parsing, outperforming MinerU (0.333 EN / 0.350 ZH) and Docling (0.538 EN / 0.569 ZH).

PP-ChatOCRv4 retrieval-augmented KIE/QA: Combines PP-StructureV3 OCR outputs with vector retrieval, a 3B VLM (PP-DocBee2) for prompt-based answer extraction from document images, and a 300B LLM (ERNIE-4.5-300B-A47B) for reasoning. Result fusion merges text-based and image-based answers. Reports 85.55% Recall@1 on a custom benchmark (638 documents, 1,196 QA pairs), outperforming GPT-4o (63.47%), PP-ChatOCRv3 (70.08%), and Qwen2.5-VL-72B (80.26%).

Deployment infrastructure: Redesigned inference library (PaddleX 3.0) with layered API/CLI, high-performance inference (HPI) with automatic backend selection (Paddle Inference, OpenVINO, ONNX Runtime, TensorRT), built-in optimizations (multi-threading, FP16), FastAPI/Triton serving, on-device support (Paddle-Lite), and an MCP server exposing OCR/parsing as tools with stdio and Streamable HTTP transports.

What experiments were performed?

PP-OCRv5 on OmniDocBench OCR (17 scenarios, 1-EditDist metric): PP-OCRv5 ranks first on average across all scenarios, compared against multiple VLM baselines with reported parameter sizes.

PP-StructureV3 on OmniDocBench parsing (Edit distance, lower is better): Evaluated on English and Chinese document parsing. PP-StructureV3 achieves Edit 0.145 (EN) and 0.206 (ZH), outperforming MinerU-1.3.11 (0.333 EN / 0.350 ZH) and Docling-2.14.0 (0.538 EN / 0.569 ZH).

PP-ChatOCRv4 on custom KIE/QA benchmark (638 document images, 1,196 QA pairs, Recall@1 metric): The dataset spans financial reports, research papers, contracts, manuals, and regulations. PP-ChatOCRv4 achieves 85.55% Recall@1, compared to GPT-4o (63.47%), PP-ChatOCRv3 (70.08%), and Qwen2.5-VL-72B (80.26%).

HPI latency reduction (NVIDIA Tesla T4): Enabling HPI reduces latency for PP-OCRv5_mobile_rec by 73.1% and PP-OCRv5_mobile_det by 40.4%.

What are the outcomes/limitations?

Outcomes:

- OmniDocBench OCR: PP-OCRv5 ranks first on average across 17 scenarios using the 1-EditDist metric.

- OmniDocBench parsing: PP-StructureV3 achieves Edit 0.145 (EN) and 0.206 (ZH), leading among open-source pipeline systems.

- Custom KIE/QA benchmark: PP-ChatOCRv4 achieves 85.55% Recall@1, a +5.29 point improvement over Qwen2.5-VL-72B (80.26%) and +22.08 points over GPT-4o (63.47%).

- Inference optimization: HPI reduces mobile recognition latency by 73.1% and mobile detection latency by 40.4% on T4 hardware.

- Deployment surface: The toolkit provides FastAPI/Triton serving, on-device deployment via Paddle-Lite, and an MCP server with Local, AI Studio, and Self-Hosted modes supporting stdio and Streamable HTTP transports.

Limitations and open questions:

- Benchmark scope: OmniDocBench OCR and parsing evaluations are publicly documented, but the custom KIE/QA benchmark (638 documents, 1,196 QA pairs) has limited external validation. The authors do not release this benchmark publicly, limiting reproducibility of the PP-ChatOCRv4 results.

- Multilingual coverage: PP-OCRv5 supports Simplified Chinese, Traditional Chinese, Pinyin, English, and Japanese. Generalization to other languages (Arabic, Cyrillic, Indic scripts, etc.) is not addressed. The single multilingual model design may face capacity constraints when scaling to dozens of languages.

- VLM dependency for KIE/QA: PP-ChatOCRv4 relies on PP-DocBee2 (3B VLM) and ERNIE-4.5 (300B LLM). The paper does not ablate the contribution of each component (pipeline OCR vs. VLM-based answer extraction vs. LLM reasoning vs. result fusion). It is unclear whether the Recall@1 gains come primarily from the fusion strategy, the quality of PP-StructureV3 outputs, or the VLM/LLM model choices.

- Deployment overhead: The HPI latency reductions are reported for mobile variants on T4 hardware. Server variants on GPU hardware (A100, H100) are not profiled. Throughput and cost-per-page estimates are not provided, limiting comparison to olmOCR ($176/M pages on L40S) or MinerU2.5 (1.224 pages/s on A100).

- License and model availability: The toolkit is Apache 2.0, but the report does not clarify the licensing or availability of ERNIE-4.5-300B-A47B (used in PP-ChatOCRv4). PP-DocBee2 weights are not linked in the paper. This limits reproducibility of the KIE/QA results.

Model

PP-OCRv5 pipeline and variants

Two variants:

- Server: GPU-optimized for throughput and accuracy.

- Mobile: CPU/resource-constrained for edge deployment.

Pipeline stages:

- Image preprocessing: Handles rotation correction, noise reduction, and normalization.

- Text detection: Identifies text regions with bounding boxes.

- Text-line orientation classification: Corrects text-line rotation (0°, 90°, 180°, 270°).

- Text recognition: Converts detected text regions to Unicode strings.
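The four stages compose naturally as a chain of functions. The sketch below shows only that control flow, with each stage passed in as a callable; every name here (`run_ocr`, `TextLine`, the stage parameters) is hypothetical and none of it is the PaddleOCR API.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2)


@dataclass
class TextLine:
    box: Box
    rotation: int  # degrees: 0, 90, 180, or 270
    text: str


def run_ocr(
    image,
    preprocess: Callable,
    detect: Callable,
    classify_orientation: Callable,
    recognize: Callable,
) -> List[TextLine]:
    """Chain preprocessing, detection, orientation classification, and recognition."""
    page = preprocess(image)
    lines = []
    for box in detect(page):
        rotation = classify_orientation(page, box)
        text = recognize(page, box, rotation)
        lines.append(TextLine(box=box, rotation=rotation, text=text))
    return lines


# Toy stand-ins for the real models, used only to exercise the control flow.
result = run_ocr(
    image="page.png",
    preprocess=lambda img: img,
    detect=lambda page: [(0, 0, 100, 20), (0, 30, 100, 50)],
    classify_orientation=lambda page, box: 0,
    recognize=lambda page, box, rotation: f"line at y={box[1]}",
)
print([line.text for line in result])
```

Keeping the stages as separate callables mirrors the modular design: a server or mobile variant would swap the detection and recognition models without changing the surrounding flow.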

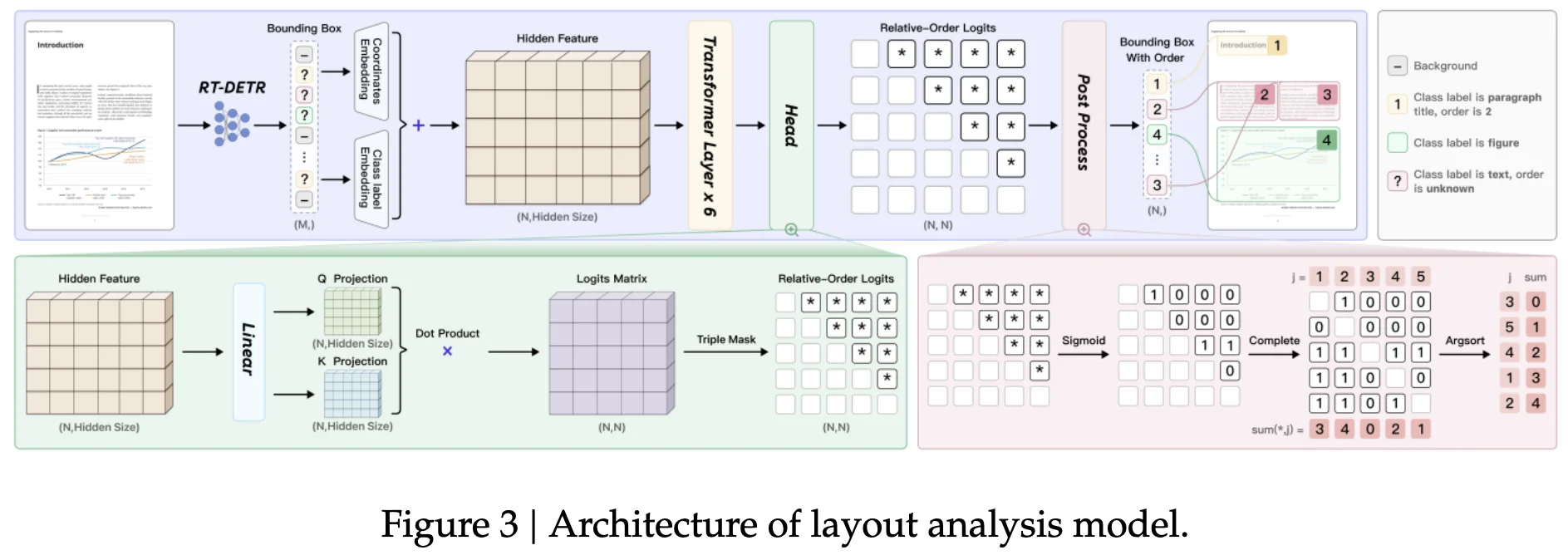

Key architectural ingredients (Figure 3 in paper, selected examples):

- Backbones: PP-HGNetV2 (server), PP-LCNetV3 (mobile).

- Detection enhancements: PFHead, DSR (Dynamic Scale Regression), Lite-Neck.

- Recognition enhancements: GTC-NRTR (Guided Training of CTC with NRTR), multi-scale training.

- Data strategy: Pretrain distillation, synthetic data generation, label refinement using ERNIE 4.5.

PP-StructureV3 modules

Core capabilities:

- Layout analysis: Identifies and classifies document regions (text blocks, tables, figures, formulas, etc.).

- Table recognition: Extracts table structure and cell content, supporting rowspan/colspan via HTML output.

- Structure extraction: Recovers reading order and hierarchical document structure.

Specialized document items:

- Seal text recognition: Handles circular and non-rectangular text (stamps, official seals).

- Formula recognition: Converts mathematical notation to LaTeX.

- Chart analysis: Extracts data from charts and graphs (details not provided in the report).

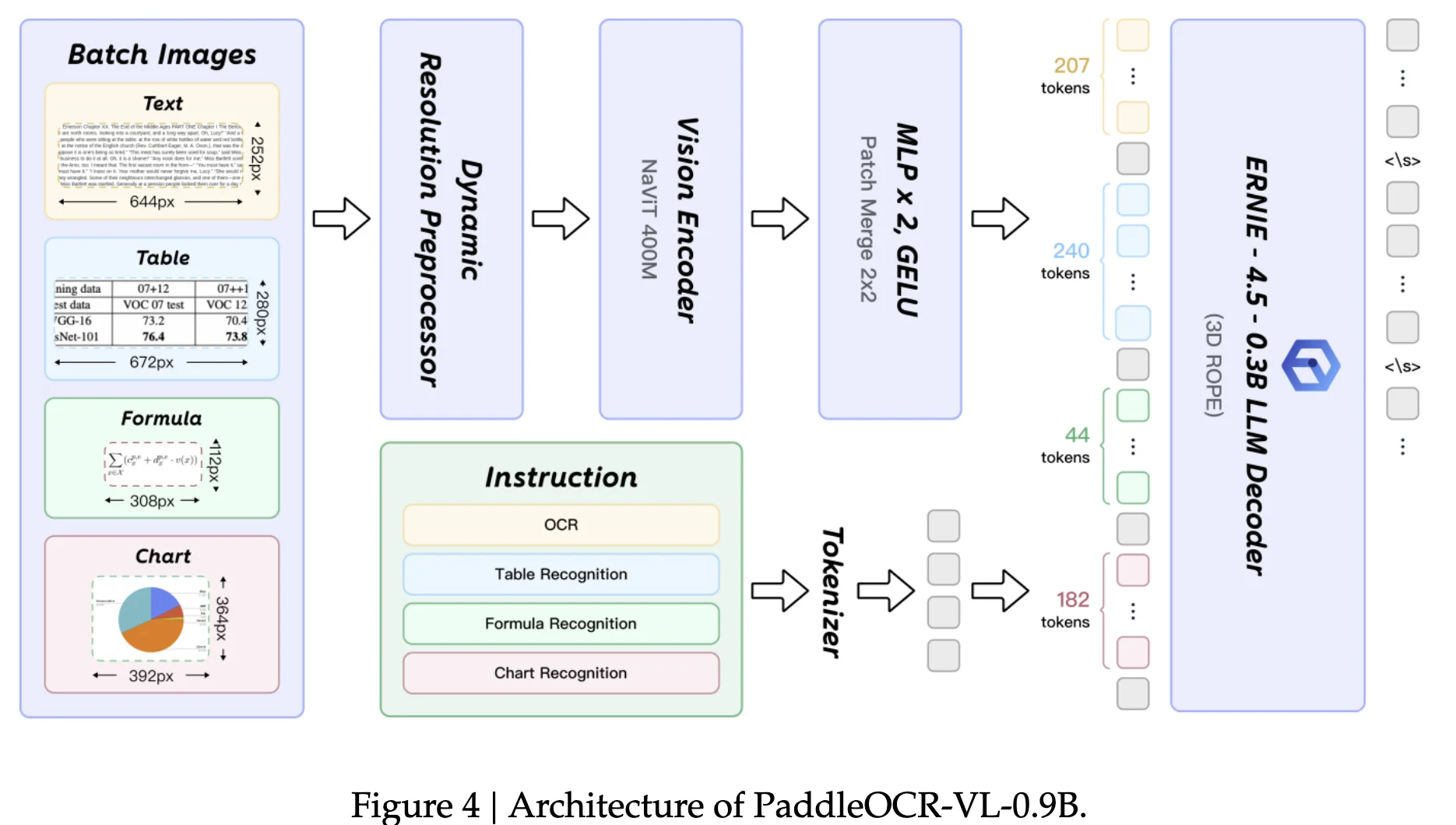

PP-ChatOCRv4 architecture

Pipeline stages (Figure 9 in paper):

- Prompt engineering: User query + optional image input.

- PP-StructureV3 extraction: OCR and document parsing to recover text, layout, and structure.

- Vector retrieval: Index OCR outputs for semantic search.

- PP-DocBee2 (3B VLM): Prompt-based answer extraction from document image regions.

- ERNIE-4.5-300B-A47B (LLM): Reasoning over retrieved text and VLM outputs.

- Result fusion: Combines text-based (OCR + LLM) and image-based (VLM) answers.
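To illustrate the vector retrieval stage in isolation, here is a toy sketch that ranks OCR text chunks against a query using bag-of-words cosine similarity. This is an assumption-heavy stand-in: the report does not describe the embedding model or index, and `embed`, `cosine`, and `retrieve` are hypothetical helpers.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase token counts (a real system would use a learned encoder)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list, top_k: int = 1) -> list:
    """Return the top_k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda chunk: cosine(query_vec, embed(chunk)), reverse=True)
    return ranked[:top_k]


chunks = [
    "The contract terminates on 31 March 2026.",
    "Payment is due within 30 days.",
    "The supplier shall deliver goods monthly.",
]
print(retrieve("When does the contract terminate?", chunks))
```

The retrieved chunks would then be passed to the LLM as context, which keeps the prompt short even for long documents.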

Key design choices:

- Dual-stream reasoning: Separate text-based (pipeline OCR $\rightarrow$ vector retrieval $\rightarrow$ LLM) and image-based (VLM) pathways. Fusion merges results to handle cases where OCR fails (e.g., complex tables, handwriting) or VLM hallucinates.

- PP-DocBee2: A 3B VLM fine-tuned for document understanding tasks. The paper does not provide architectural details, training data, or standalone evaluation results for PP-DocBee2.
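The report does not spell out the fusion rule, so the following is purely a hypothetical sketch of how two answer streams could be merged: keep whichever answer is more confident, and abstain when neither clears a threshold. The `Answer` type, the confidence scores, and the `fuse` function are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Answer:
    text: Optional[str]  # None when the stream produced no answer
    confidence: float    # assumed to lie in [0, 1]


def fuse(text_answer: Answer, image_answer: Answer, min_confidence: float = 0.5) -> Optional[str]:
    """Pick the more confident of the two streams; return None if neither is usable."""
    candidates = [
        answer
        for answer in (text_answer, image_answer)
        if answer.text and answer.confidence >= min_confidence
    ]
    if not candidates:
        return None
    # On ties, max() keeps the first candidate, i.e. the text-based stream.
    return max(candidates, key=lambda answer: answer.confidence).text


# OCR stream failed (e.g. handwriting), so the VLM answer is used.
print(fuse(Answer(None, 0.0), Answer("42", 0.8)))
```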

Data

PP-OCRv5 training data

The report does not specify the size, composition, or sourcing of the PP-OCRv5 training dataset. Key data strategies are described qualitatively:

- Synthetic data generation: Augments training with rendered text images.

- Label refinement using ERNIE 4.5: Corrects noisy labels in existing datasets.

- Multilingual coverage: Training data includes Simplified Chinese, Traditional Chinese, Pinyin, English, and Japanese text.

PP-ChatOCRv4 evaluation benchmark

Size: 638 document images, 1,196 QA pairs.

Document types: Financial reports, research papers, contracts, manuals, regulations, and other unspecified categories.

Metric: Recall@1 (exact match or fuzzy match against ground-truth answers).

Limitations: The benchmark is not publicly released, limiting external validation and reproducibility. The authors do not specify the annotation protocol, inter-annotator agreement, or difficulty distribution of the QA pairs.

OmniDocBench datasets

PP-OCRv5 evaluation: 17 scenarios covering diverse document types, scripts, and layouts. The paper cites OmniDocBench but does not detail the dataset composition or size.

PP-StructureV3 evaluation: English and Chinese document parsing subsets of OmniDocBench. The Edit metric measures character-level edit distance between predicted and ground-truth document structure.
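For reference, a character-level edit distance can be sketched as a standard Levenshtein computation. Normalizing by the longer string's length is an assumption made here so that scores fall in [0, 1]; the benchmark's own evaluation code should be consulted for the exact normalization.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, and substitutions."""
    if len(a) < len(b):
        a, b = b, a  # keep the inner row as short as possible
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1, current[j - 1] + 1, previous[j - 1] + cost))
        previous = current
    return previous[-1]


def normalized_edit_distance(prediction: str, reference: str) -> float:
    """Edit distance divided by the longer length; 0.0 means identical strings."""
    longest = max(len(prediction), len(reference))
    return levenshtein(prediction, reference) / longest if longest else 0.0


print(levenshtein("kitten", "sitting"))            # 3
print(normalized_edit_distance("abcd", "abcf"))    # 0.25
```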

Evaluation

PP-OCRv5 (OmniDocBench OCR)

| Method | 1-EditDist (average) |

|---|---|

| PP-OCRv5 | Rank 1 (value NR) |

| VLM Baseline 1 | NR |

| VLM Baseline 2 | NR |

The report states that PP-OCRv5 ranks first on average across 17 scenarios, but absolute 1-EditDist values and the names of the competing VLM baselines are not reported (NR) here. The corresponding table in the paper lists parameter counts for the baselines but omits numeric results.

PP-StructureV3 (OmniDocBench parsing)

| Method | EN Edit | ZH Edit |

|---|---|---|

| PP-StructureV3 | 0.145 | 0.206 |

| MinerU-1.3.11 | 0.333 | 0.350 |

| Docling-2.14.0 | 0.538 | 0.569 |

Edit distance is computed at the character level between predicted and ground-truth document structure. Lower is better. PP-StructureV3 leads by a substantial margin (2.3$\times$ better than MinerU on EN, 1.7$\times$ on ZH).
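The multipliers quoted above are ratios of edit distances taken from the table. A short check, with the helper name `ratio_vs` chosen for this example:

```python
# (EN Edit, ZH Edit) values copied from the table above.
scores = {
    "PP-StructureV3": (0.145, 0.206),
    "MinerU-1.3.11": (0.333, 0.350),
    "Docling-2.14.0": (0.538, 0.569),
}


def ratio_vs(baseline: str, system: str = "PP-StructureV3") -> tuple:
    """How many times larger the baseline's edit distance is than the system's (EN, ZH)."""
    return tuple(round(b / s, 1) for b, s in zip(scores[baseline], scores[system]))


print(ratio_vs("MinerU-1.3.11"))   # (2.3, 1.7)
print(ratio_vs("Docling-2.14.0"))  # (3.7, 2.8)
```

Because lower edit distance is better, a ratio of 2.3 means MinerU's error is 2.3 times that of PP-StructureV3 on the English subset.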

PP-ChatOCRv4 (custom KIE/QA benchmark)

| Method | Recall@1 |

|---|---|

| PP-ChatOCRv4 | 85.55% |

| Qwen2.5-VL-72B | 80.26% |

| PP-ChatOCRv3 | 70.08% |

| GPT-4o | 63.47% |

Recall@1 measures whether the system’s top-ranked answer matches the ground-truth (exact or fuzzy match). PP-ChatOCRv4 achieves a +5.29 point gain over Qwen2.5-VL-72B and +22.08 points over GPT-4o.
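A minimal sketch of how such a Recall@1 score could be computed follows. The fuzzy criterion is not specified in the report, so the use of `difflib.SequenceMatcher` with a 0.9 threshold is an assumption, as are the helper names `is_match` and `recall_at_1`. The last lines reproduce the point gains from the table.

```python
from difflib import SequenceMatcher


def is_match(prediction: str, target: str, threshold: float = 0.9) -> bool:
    """Exact match after normalization, or similarity ratio at or above the threshold."""
    pred, gold = prediction.strip().lower(), target.strip().lower()
    return pred == gold or SequenceMatcher(None, pred, gold).ratio() >= threshold


def recall_at_1(predictions: list, targets: list, threshold: float = 0.9) -> float:
    """Percentage of questions whose top answer matches the ground truth."""
    if not targets or len(predictions) != len(targets):
        raise ValueError("need equally sized, non-empty prediction and target lists")
    hits = sum(is_match(p, t, threshold) for p, t in zip(predictions, targets))
    return 100.0 * hits / len(targets)


preds = ["31 March 2026", "ACME Corp", "unknown"]
golds = ["31 March 2026", "ACME Corp.", "Jane Doe"]
print(round(recall_at_1(preds, golds), 2))  # two of three answers match

# Point gains quoted above, recomputed from the table.
print(round(85.55 - 80.26, 2), round(85.55 - 63.47, 2))  # 5.29 22.08
```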

Limitations: The benchmark is not publicly released. The authors do not ablate the contribution of PP-StructureV3 OCR quality vs. VLM answer extraction vs. LLM reasoning vs. result fusion. It is unclear whether the gains come from superior OCR, better VLM/LLM models, or the fusion strategy.

Hardware / Production

High-performance inference (HPI)

Key features:

- Automatic backend selection: Paddle Inference, OpenVINO, ONNX Runtime, TensorRT. The system selects the optimal backend based on hardware and model architecture.

- Built-in optimizations: Multi-threading, FP16 precision, on-demand ONNX conversion.

- Enabled via API: `enable_hpi=True` in the Python API.

Latency reduction on NVIDIA Tesla T4:

| Model | Latency Reduction |

|---|---|

| PP-OCRv5_mobile_rec | 73.1% |

| PP-OCRv5_mobile_det | 40.4% |

The report does not provide absolute latency values (ms/page) or throughput estimates (pages/s).
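Even without absolute numbers, a relative latency reduction can be converted into an implied speedup factor, since a reduction of r leaves a fraction 1 - r of the original latency. A small sketch (the function name is illustrative):

```python
def speedup_from_reduction(reduction_percent: float) -> float:
    """Convert a latency reduction in percent into a speedup factor."""
    remaining = 1.0 - reduction_percent / 100.0
    if remaining <= 0:
        raise ValueError("reduction must be below 100%")
    return 1.0 / remaining


for model, reduction in [("PP-OCRv5_mobile_rec", 73.1), ("PP-OCRv5_mobile_det", 40.4)]:
    print(f"{model}: {speedup_from_reduction(reduction):.2f}x faster")
```

Under this reading, the recognition model runs about 3.72 times faster with HPI enabled and the detection model about 1.68 times faster.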

Serving options

Basic Serving (FastAPI):

- Lightweight REST API for OCR and document parsing.

- Multi-language client examples (Python, C++, etc.) provided in documentation.

High-Stability Serving (Triton):

- NVIDIA Triton Inference Server integration for production deployments.

- Supports dynamic batching, model versioning, and ensemble inference.

On-device deployment:

- Paddle-Lite tooling for Android, iOS, and edge hardware.

- Mobile variants of PP-OCRv5 designed for CPU/resource-constrained environments.

MCP server

Model Context Protocol integration:

- Exposes OCR (PP-OCRv5) and document parsing (PP-StructureV3) as tools for LLM agents.

- Modes: Local (runs models on local hardware), AI Studio (cloud-hosted inference), Self-Hosted (user-managed servers).

- Transports: stdio (standard input/output) and Streamable HTTP (streaming responses).

Example configuration (Local mode, stdio transport):

{
  "mcpServers": {
    "paddleocr-mcp": {
      "command": "python",
      "args": ["-m", "paddleocr_mcp.server"],
      "env": {
        "MODE": "local"
      }
    }
  }
}

Implementation sketch

Python API for PP-StructureV3

from paddlex import create_pipeline

pipeline = create_pipeline(pipeline="PP-StructureV3")

# Single-page inference
output = pipeline.predict("document.pdf")

# Batch inference
for result in pipeline.predict(["doc1.pdf", "doc2.pdf"], batch_size=4):
    result.save_to_img("output/")
    result.save_to_json("output/")

Python API for PP-ChatOCRv4

from paddlex import create_pipeline

pipeline = create_pipeline(pipeline="PP-ChatOCRv4Doc")

# Build vector index from documents
pipeline.build_vector(data_root="documents/")

# Query the indexed documents
result = pipeline.chat(
    key_list=["contract_2023.pdf"],
    query="What is the contract termination date?",
)

print(result["result"])

Enabling HPI

from paddlex import create_pipeline

pipeline = create_pipeline(
    pipeline="PP-OCRv5",
    enable_hpi=True,  # Automatic backend selection + optimizations
)

Notes and open questions

Observations:

- PaddleOCR 3.0 is a comprehensive toolkit (OCR + parsing + KIE/QA + deployment) rather than a single model. The Apache 2.0 license and deployment infrastructure (HPI, serving, on-device, MCP) target production adoption.

- PP-StructureV3 leads on OmniDocBench parsing with substantial margins (2.3$\times$ better than MinerU on EN Edit). This suggests strong layout analysis and structure recovery, though the paper does not ablate the contribution of individual modules (layout detector, table recognizer, reading-order recovery, etc.).

- PP-ChatOCRv4’s +22 point Recall@1 gain over GPT-4o is striking, but the custom benchmark is not publicly released and the ablation is incomplete. It is unclear whether the improvement comes from superior OCR (PP-StructureV3), better VLM/LLM models (PP-DocBee2 + ERNIE-4.5), or the result fusion strategy.

Open questions:

- PP-OCRv5 training data: The report describes data strategies (synthetic generation, label refinement with ERNIE 4.5) but does not specify dataset size, composition, or sourcing. How much training data is required to achieve the reported OmniDocBench performance?

- OmniDocBench OCR results: The paper states PP-OCRv5 ranks first on average but omits numeric 1-EditDist values and baseline names/sizes. External validation on other OCR benchmarks (e.g., olmOCR-Bench, FoxBench) is absent.

- PP-ChatOCRv4 ablations: What is the contribution of each component (PP-StructureV3 OCR vs. PP-DocBee2 VLM vs. ERNIE-4.5 LLM vs. result fusion)? Does the fusion strategy generalize to other VLM/LLM combinations (e.g., GPT-4o + Qwen2.5-VL)?

- Deployment cost and throughput: The HPI latency reductions (73% for mobile recognition on T4) are impressive, but absolute latency (ms/page) and throughput (pages/s) are not reported. Cost-per-page estimates (e.g., olmOCR’s $176/M pages on L40S) are absent, limiting production planning.

- PP-DocBee2 details: The paper does not provide architectural details, training data, or standalone evaluation results for the 3B VLM used in PP-ChatOCRv4. Is PP-DocBee2 a general-purpose VLM or a document-specialized model? Are the weights publicly available?

- Multilingual scaling: PP-OCRv5 supports five languages/scripts (Simplified Chinese, Traditional Chinese, Pinyin, English, Japanese). How would the single multilingual model design scale to dozens of languages (Arabic, Cyrillic, Indic scripts, etc.)? Would capacity constraints require larger models or ensemble architectures?

olmOCR: Unlocking Trillions of Tokens in PDFs with Vision Language Models

Paper: olmOCR: Unlocking Trillions of Tokens in PDFs with Vision Language Models

Code: allenai/olmocr

Demo: olmocr.allenai.org

Model: olmOCR-7B-0825

TL;DR

olmOCR is a PDF-to-text linearization toolkit centered on a 7B vision-language model fine-tuned from Qwen2-VL-7B-Instruct. The system converts PDFs (both born-digital and scanned) into clean plain text suitable for language model development. The core innovation is “document anchoring,” a prompt technique that injects PDF-extracted text blocks with coordinates to reduce reading-order errors and hallucinations. On olmOCR-Bench (1,402 PDFs, 7,010 unit tests), the anchored model achieves 75.5% overall pass rate with an estimated cost of \$176 per million pages on L40S hardware.

What kind of paper is this?

Primarily $\Psi_{\text{Resource}}$, with meaningful $\Psi_{\text{Method}}$ and $\Psi_{\text{Evaluation}}$ components.

- Dominant: $\Psi_{\text{Resource}}$: The headline deliverables are (1) a training dataset (olmOCR-mix-0225, 260k pages), (2) a benchmark suite (olmOCR-Bench with 7,010 unit tests), and (3) a released fine-tuned model with inference code. The paper emphasizes enabling reproducible PDF processing for the research community.

- Secondary: $\Psi_{\text{Method}}$: Document anchoring (layout-aware prompt engineering with extracted text blocks and bounding boxes) is presented as a novel technique for reducing VLM hallucinations in document contexts.

- Secondary: $\Psi_{\text{Evaluation}}$: The benchmark design itself represents a substantial contribution: deterministic unit-test-style pass/fail rules that avoid LLM-as-judge biases.

What is the motivation?

PDFs encode rendering primitives (glyphs with positions) rather than semantic text structure or ground-truth reading order, making them challenging inputs for language model training and document-grounded inference.

- Low-fidelity extraction hurts LM training: Poor OCR quality can degrade training stability and downstream task performance. Cascading errors in document workflows compound the problem.

- Proprietary solutions are expensive: The authors cite GPT-4o API costs exceeding \$6,200 per million pages as a barrier to large-scale PDF processing for open research.

- Existing open tools lack validation: Pipeline systems (Marker, MinerU) and open VLMs have no standardized, reproducible benchmark for comparing PDF linearization quality.

What is the novelty?

Document anchoring: The system extracts text blocks with bounding boxes from the PDF using pypdf, then injects a subset of these blocks (with coordinates and image placeholders) into the VLM prompt alongside the page image. This provides layout hints that help the model maintain correct reading order and reduce hallucinated content.

Silver-data recipe: The authors generate approximately 260k page OCR targets with GPT-4o using structured JSON output, then fine-tune Qwen2-VL-7B-Instruct into olmOCR-7B-0225-preview. The training data is filtered for English-only content and sampled up to 3 pages per PDF.

Unit-test benchmark: olmOCR-Bench uses deterministic binary checks (presence/absence/order/table structure/math formula accuracy) designed to be evaluator-model-independent, explicitly addressing concerns about LLM-judge bias in document evaluation.

What experiments were performed?

olmOCR-Bench evaluation (Table 4): 1,402 PDFs spanning 7,010 unit tests across categories including text presence/absence, natural reading order, table accuracy, and math formula rendering. Baselines include open pipeline tools (Marker v1.7.5, MinerU v1.3.10), proprietary OCR systems (Mistral OCR, GPT-4o, Gemini Flash), and Qwen2-VL variants with and without document anchoring.

Cost analysis (Table 6): Estimates tokens/sec, pages/USD, and cost per million pages for multiple systems under specified hardware assumptions (L40S at \$0.79/hr, H100 at \$2.69/hr), including GPT-4o API vs. batch pricing.

Downstream LM pretraining ablation (Table 5): Continues pretraining OLMo-2-1124-7B for 50B tokens using PDFs linearized by olmOCR vs. Grobid+rules (peS2o baseline), then evaluates on standard LM benchmarks (MMLU, ARC-Challenge, DROP, etc.).

Ablations on anchoring: Compares Qwen2-VL variants and GPT-4o with and without document anchoring prompts to isolate the contribution of layout-aware context.

What are the outcomes/limitations?

Key results:

- olmOCR-Bench overall pass rate: 75.5% (95% CI via bootstrap) for the anchored model, outperforming Marker (70.1%), MinerU (61.5%), Mistral OCR (72.0%), GPT-4o (68.9%), and Qwen2.5-VL (65.5%).

- Cost estimate: \$176 per million pages on L40S hardware (assumes 12% retry rate for JSON parsing failures and degenerate repetition), compared to \$6,240 for GPT-4o batch API and \$596 for MinerU on L40S.

- Downstream pretraining impact: +1.3 percentage points average improvement across benchmark suite when replacing Grobid+rules PDF processing with olmOCR (53.9% $\rightarrow$ 55.2% average score).

Limitations and open questions:

- English-only training data: Explicit filtering via Lingua removes non-English documents, limiting multilingual generalization. The 3-page-per-PDF sampling may bias coverage toward short documents.

- Teacher model inheritance: Fine-tune targets are GPT-4o outputs, so the student model inherits teacher quirks and evaluation aligns to teacher behavior rather than purely human preference.

- Reliability challenges: The paper describes retries for JSON parsing failures and degenerate repetition (mitigated with higher temperature $\tau = 0.8$). The 12% retry rate impacts throughput in production.

- Benchmark generalization: olmOCR-Bench is carefully engineered but remains an in-house suite. The document distribution (55.9% academic papers) may not reflect other production use cases. External validation on held-out benchmarks is not the core claim.

- Long document handling: Sampling only a few pages per PDF during training raises questions about failure modes on documents with complex multi-page structure.

Contrast to olmOCR 2: This initial olmOCR release (February 2025) focuses on GPT-4o distillation with document anchoring prompts, whereas olmOCR 2 (October 2025) shifts to RL-based policy improvements using unit-test rewards from the same benchmark suite. olmOCR 2 reports 81.2% pass rate (vs. 75.5% here) and eliminates structured JSON output in favor of direct plain-text generation, improving robustness and reducing retry overhead.

Model

Base model: Qwen2-VL-7B-Instruct fine-tuned into olmOCR-7B-0225-preview (7B parameters).

Output format: The model generates structured JSON during training (matching GPT-4o’s synthetic target schema), though the inference prompt can be simplified to return “plain text representation” directly. The JSON schema includes fields for primary_language, rotation validity/correction, boolean flags for tables/formulas/diagrams, and natural_text.

Document anchoring representation

The prompt construction pipeline:

- Extract PDF text blocks and image blocks with bounding boxes using `pypdf`.

- Sample a subset of blocks (preferring start/end of document) to inject into the prompt.

- If the prompt exceeds 8,192 tokens, regenerate with reduced character limits per block.

- Concatenate the page image, selected text blocks (with `RAW_TEXT_START/END` delimiters), and generation instructions.

The authors describe this as “layout-aware retrieval” that provides the VLM with content hints and rough ordering cues, reducing hallucinations and reading-order errors.
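The block selection and budget-reduction steps can be sketched in plain Python. This is not the olmOCR implementation: the `TextBlock` type, the `[x×y]text` line format, the both-ends selection policy, the halving schedule, and the use of character count as a stand-in for token count are all assumptions made for illustration. Only the 8,192 limit and the `RAW_TEXT_START`/`RAW_TEXT_END` delimiters come from the notes above.

```python
from dataclasses import dataclass


@dataclass
class TextBlock:
    x: float  # horizontal position of the block on the page
    y: float  # vertical position of the block on the page
    text: str


def render(block: TextBlock) -> str:
    """Format one block as a coordinate-tagged line (assumed format)."""
    return f"[{block.x:.0f}x{block.y:.0f}]{block.text}"


def build_anchor_text(blocks: list[TextBlock], max_chars: int) -> str:
    """Keep blocks from both ends first, then emit the kept ones in page order."""
    # Visit indices as 0, n-1, 1, n-2, ... so the first and last blocks
    # survive when the character budget runs out.
    order, lo, hi = [], 0, len(blocks) - 1
    while lo <= hi:
        order.append(lo)
        if hi != lo:
            order.append(hi)
        lo, hi = lo + 1, hi - 1

    kept, used = set(), 0
    for i in order:
        cost = len(render(blocks[i])) + 1  # +1 for the newline
        if used + cost > max_chars:
            break
        kept.add(i)
        used += cost

    lines = [render(b) for i, b in enumerate(blocks) if i in kept]
    return "RAW_TEXT_START\n" + "\n".join(lines) + "\nRAW_TEXT_END"


def anchor_within_limit(blocks, max_tokens=8192, start_chars=6000, count_tokens=len):
    """Halve the character budget until the anchor text fits the token limit."""
    budget = start_chars
    while budget > 0:
        text = build_anchor_text(blocks, budget)
        if count_tokens(text) <= max_tokens:
            return text
        budget //= 2
    return build_anchor_text(blocks, 0)


blocks = [
    TextBlock(10.0, 700.0, "Title"),
    TextBlock(10.0, 400.0, "Body paragraph"),
    TextBlock(10.0, 50.0, "Footer"),
]
small = build_anchor_text(blocks, max_chars=30)
full = anchor_within_limit(blocks)
```

In a real pipeline `count_tokens` would be the model tokenizer and the page image would be attached alongside this text.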

Data

Training set: olmOCR-mix-0225

Size: 102,825 unique PDFs / 258,641 pages total (Table 1).

Source breakdown:

- Web PDFs: 96,929 documents / 240,940 pages (drawn from internal crawl of over 240 million PDF documents).

- Internet Archive books: 5,896 documents / 17,701 pages.

Filtering and sampling:

- Remove non-English documents (Lingua language detector).

- Filter parsing failures, spam keywords (explicit list), fillable forms, and documents with insufficient extractable text.

- Sample up to 3 pages per PDF.

Document type distribution (Table 2, manual annotation):

- Academic papers: 55.9%

- Brochures: 11.2%

- Legal documents: 10.2%

- Books: 6.8%

- Table-heavy: 5.6%

- Diagram-heavy: 4.7%

- Slideshows: 1.9%

- Other: 3.7%

Synthetic labeling with GPT-4o

The teacher model (GPT-4o) receives prompts with rules including:

- Preserve natural reading order.

- Use LaTeX for equations, Markdown for tables.

- Remove headers/footers when appropriate.

- Handle handwritten annotations if present.

- Do not hallucinate content.

- Output `null` if no text is present.

The structured JSON schema captures language, rotation metadata, content flags, and the final natural_text field.

Algorithms / Training

Fine-tuning configuration

- Effective batch size: 4

- Optimizer: AdamW

- Learning rate: $1 \times 10^{-6}$

- Schedule: Cosine annealing

- Steps: 10,000 (approximately 1.2 epochs over 258k pages)

- Hardware: 8 $\times$ H100 80GB

- Runtime: 16 node-hours

- Context truncation: Training examples truncated to 8,192 tokens; loss masked to final response tokens only.
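A quick arithmetic check of the step and epoch figures above. With 4 examples per optimizer step, 10,000 steps cover only about 0.15 epochs of 258,641 pages, whereas the quoted 1.2 epochs corresponds to roughly 31 pages per step. One reading that reconciles the two, and it is only an assumption here, is that the batch size of 4 applies per GPU across the 8 H100s.

```python
pages = 258_641   # training pages (Table 1)
steps = 10_000    # optimizer steps


def epochs(pages_per_step: int) -> float:
    """Number of passes over the training set for a given step size."""
    return steps * pages_per_step / pages


epochs_bs4 = epochs(4)          # batch of 4 pages in total
epochs_bs32 = epochs(4 * 8)     # assumption: 4 pages on each of 8 GPUs
pages_per_step_needed = 1.2 * pages / steps

print(f"4 pages/step:  {epochs_bs4:.2f} epochs")
print(f"32 pages/step: {epochs_bs32:.2f} epochs")
print(f"pages/step implied by 1.2 epochs: {pages_per_step_needed:.1f}")
```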

Inference-time prompt

The production prompt for olmOCR-7B-0225-preview is minimal:

- Page image

- Prior extracted raw text blocks (with `RAW_TEXT_START/END` delimiters)

- Instruction: “return the plain text representation of this document as if you were reading it naturally”

No explicit JSON schema enforcement at inference (though the model was trained to produce JSON).

Evaluation

olmOCR-Bench construction

Design philosophy: Deterministic unit tests with binary pass/fail outcomes, avoiding LLM-as-judge and fuzzy reference matching. The authors explicitly cite concerns about evaluator bias and non-reproducibility in document benchmarks.

Size: 1,402 PDFs / 7,010 tests.

Test categories (Table 3, selected examples):

- Text presence (TP): Fuzzy string matching to verify key content appears.

- Text absence (TA): Verify headers/footers are removed.

- Natural reading order (NR): Check relative ordering of text segments.

- Table accuracy (TT): Validate neighbor-cell constraints in Markdown/HTML tables (HTML required for rowspan/colspan).

- Math formula accuracy (MF): KaTeX-rendered symbol layout matching.
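Three of these check types (presence, absence, reading order) can be sketched as deterministic functions. This is an illustration of the idea, not the benchmark's code: the whitespace and case normalization, the sliding-window `difflib` matcher, and the 0.9 threshold are assumptions, and the table and math checks are omitted.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so line breaks do not matter."""
    return " ".join(text.lower().split())


def fuzzy_find(needle: str, haystack: str, threshold: float = 0.9) -> int:
    """Return the start index of the best fuzzy match of needle, or -1."""
    needle, haystack = normalize(needle), normalize(haystack)
    exact = haystack.find(needle)
    if exact != -1:
        return exact
    best_idx, best_ratio = -1, 0.0
    for start in range(max(1, len(haystack) - len(needle) + 1)):
        window = haystack[start:start + len(needle)]
        ratio = SequenceMatcher(None, needle, window).ratio()
        if ratio > best_ratio:
            best_idx, best_ratio = start, ratio
    return best_idx if best_ratio >= threshold else -1


def text_present(output: str, snippet: str) -> bool:
    return fuzzy_find(snippet, output) != -1


def text_absent(output: str, snippet: str) -> bool:
    return fuzzy_find(snippet, output) == -1


def reading_order(output: str, before: str, after: str) -> bool:
    """Pass only if both snippets are found and appear in the given order."""
    i, j = fuzzy_find(before, output), fuzzy_find(after, output)
    return i != -1 and j != -1 and i < j


page = "Introduction\nWe study OCR quality.\nResults\nAccuracy improves.\n"
checks = [
    text_present(page, "we study ocr qualty"),   # tolerates a small typo
    text_absent(page, "Page 3 of 10"),           # footer should be gone
    reading_order(page, "Introduction", "Results"),
]
pass_rate = sum(checks) / len(checks)
```

Each check is a pure function of the model output, so reruns give the same pass rate without any judge model.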

Document type distribution in benchmark (Table 3):

- arXiv Math (AR): 2,927 formula tests

- Multi-Column (MC): 884 reading-order tests

- Tables (TT): 1,020 table structure tests

- Legal-Tabular-Tiny (LTT): 213 combined tests

- Old Scans Math (OSM): 123 specialized tests

- Table Accuracy (TA): (additional table tests, count not specified in draft)

Benchmark results

Overall pass rate (95% CI, Table 4):

| System | Pass Rate |

|---|---|

| Marker v1.7.5 | 70.1% |

| MinerU v1.3.10 | 61.5% |

| Mistral OCR | 72.0% |

| GPT-4o (no anchor) | 68.9% |

| GPT-4o (anchored) | 69.9% |

| Qwen 2.5 VL (no anchor) | 65.5% |

| olmOCR v0.1.75 (anchored) | 75.5% |
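A percentile bootstrap over per-test pass/fail outcomes is one common way to obtain such an interval. The sketch below is an assumption about the general technique, not the paper's exact procedure (these notes do not record the resampling unit or the number of resamples), and the outcomes are synthetic rather than benchmark data.

```python
import random


def bootstrap_ci(outcomes: list[bool], n_resamples: int = 1000,
                 alpha: float = 0.05, seed: int = 0) -> tuple[float, float, float]:
    """Return (pass_rate, lower, upper) using a percentile bootstrap."""
    rng = random.Random(seed)
    n = len(outcomes)
    # Resample the tests with replacement and record each resample's pass rate.
    means = sorted(
        sum(rng.choices(outcomes, k=n)) / n for _ in range(n_resamples)
    )
    lower = means[int((alpha / 2) * n_resamples)]
    upper = means[int((1 - alpha / 2) * n_resamples) - 1]
    return sum(outcomes) / n, lower, upper


# Synthetic outcomes for illustration only: 755 passes out of 1,000 tests.
outcomes = [True] * 755 + [False] * 245
mean, lo, hi = bootstrap_ci(outcomes)
print(f"pass rate {mean:.3f}, 95% CI [{lo:.3f}, {hi:.3f}]")
```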

Category-wise highlights:

- Anchored olmOCR leads on AR (74.9%), MC (78.3%), and LTT (73.3%).

- Math-heavy OSM and table-heavy TA categories show more competitive performance across baselines.

Cost comparison

Cost per million pages (Table 6, selected systems):

| System | Cost/M pages |

|---|---|

| GPT-4o API | \$12,480 |

| GPT-4o Batch | \$6,240 |

| Marker (H100) | \$1,484 |

| MinerU (L40S) | \$596 |

| Gemini Flash 2 Batch | \$249 |

| olmOCR (L40S) | \$176 |

| olmOCR (H100) | \$178 |

Assumptions: L40S at \$0.79/hr, H100 at \$2.69/hr, 12% retry rate for JSON parsing failures and repetition handling.
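The table entries follow from GPU price divided by throughput. The sketch below backs out the effective pages per hour implied by the olmOCR rows; those throughput values are derived here from the listed prices and costs, not quoted from the paper.

```python
def cost_per_million_pages(gpu_usd_per_hour: float, pages_per_hour: float) -> float:
    """Cost of processing one million pages at a given effective throughput."""
    return gpu_usd_per_hour / pages_per_hour * 1_000_000


def implied_pages_per_hour(gpu_usd_per_hour: float, usd_per_million: float) -> float:
    """Invert the formula: effective throughput implied by a cost figure."""
    return gpu_usd_per_hour / usd_per_million * 1_000_000


l40s_pph = implied_pages_per_hour(0.79, 176)   # about 4,489 pages/hour
h100_pph = implied_pages_per_hour(2.69, 178)   # about 15,112 pages/hour

print(f"L40S: {l40s_pph:,.0f} pages/hour ({l40s_pph / 3600:.2f} pages/s)")
print(f"H100: {h100_pph:,.0f} pages/hour ({h100_pph / 3600:.2f} pages/s)")
```

The near-identical cost on both GPUs means the H100's higher hourly price is almost exactly offset by its higher throughput.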

Downstream LM pretraining ablation

Setup: Continue pretraining OLMo-2-1124-7B for 50B tokens on PDFs linearized with olmOCR vs. Grobid+rules (peS2o baseline). Evaluate on standard benchmarks.

Results (Table 5):

- Baseline (Grobid+rules) average score: 53.9%

- olmOCR average score: 55.2%

- Improvement: +1.3 percentage points

This suggests OCR/linearization quality can measurably impact LM performance at the 50B-token pretraining scale.

Hardware / Production

Inference pipeline and robustness

Orchestration: The system uses SGLang for serving and chunks work into batches of approximately 500 pages, coordinated through shared cloud storage (S3).

Reliability heuristics:

- Prompt regeneration: If token count exceeds 8,192, reduce character budget for sampled text blocks and rebuild prompt.

- Retry on JSON parse failure: Re-run generation with adjusted parameters.

- Rotation correction: Use PDF metadata to detect and correct page orientation.

- Degenerate repetition mitigation: Retry with higher temperature ($\tau = 0.8$) if the model produces repetitive output; fall back to alternative strategies if retries fail.

The reported 12% retry rate indicates non-trivial overhead in production deployments, though the authors frame this as necessary for quality assurance.
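The retry heuristics can be sketched as a small loop. Everything here is illustrative: `generate` is a hypothetical stand-in for the VLM call, the initial temperature of 0.1, the cap of three retries, and the line-repetition check are assumptions. Only the retry temperature of 0.8 and the `natural_text` field come from the notes above.

```python
import json
from typing import Callable, Optional


def looks_degenerate(text: str, min_repeats: int = 5) -> bool:
    """Crude repetition check: the same non-empty line many times in a row."""
    run, prev = 0, None
    for line in text.splitlines():
        line = line.strip()
        if line and line == prev:
            run += 1
            if run >= min_repeats:
                return True
        else:
            run, prev = 1, line
    return False


def generate_with_retries(generate: Callable[[str, float], str], prompt: str,
                          max_retries: int = 3) -> Optional[dict]:
    """Try a low temperature first, then retry at 0.8 on bad output."""
    for temperature in [0.1] + [0.8] * max_retries:
        raw = generate(prompt, temperature)
        if looks_degenerate(raw):
            continue
        try:
            parsed = json.loads(raw)
        except json.JSONDecodeError:
            continue
        if isinstance(parsed, dict) and "natural_text" in parsed:
            return parsed
    return None  # caller falls back to another strategy


# Fake model: the first call returns invalid JSON, the second succeeds.
calls = []


def fake_generate(prompt: str, temperature: float) -> str:
    calls.append(temperature)
    return "not json" if len(calls) == 1 else '{"natural_text": "hello"}'


result = generate_with_retries(fake_generate, "page prompt")
```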

Throughput estimates

The paper provides pages-per-hour and cost-per-page calculations based on measured token throughput on L40S and H100 hardware, factoring in the retry rate. Specific throughput numbers are embedded in the cost estimates (Table 6).

Implementation sketch

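# Conceptual pipeline based on the paper's description.
# All helper names are placeholders, not functions from pypdf or olmOCR.
def linearize_pdf(pdf, vlm, max_prompt_tokens=8192):
    plaintext_pages = []
    for page in pdf:
        page_image = render_page_image(page)
        blocks = extract_text_and_images_with_bboxes(page)  # pypdf-based in the paper
        selected = sample_blocks_preferring_doc_start_end(blocks)
        prompt = build_prompt(page_image, selected)
        if count_tokens(prompt) > max_prompt_tokens:
            # Rebuild the prompt with a smaller per-block character budget
            selected = resample_blocks_with_lower_char_budget(blocks)
            prompt = build_prompt(page_image, selected)
        output = vlm.generate(prompt)
        if parse_fail_or_repetition(output):
            output = retry_with_adjustments(prompt, tau=0.8)
        plaintext_pages.append(output)
    return concatenate(plaintext_pages)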

This matches the described use of pypdf for block extraction, selective sampling, prompt regeneration under token limits, and retry logic for robustness.

Notes and open questions

Observations:

- Document anchoring is essentially layout-aware retrieval: injecting PDF primitives (text + bboxes) into the VLM prompt. The technique is straightforward to implement but depends heavily on PDF parser quality and block selection policy.

- The benchmark design deliberately avoids LLM-judge evaluators, addressing real reproducibility concerns in document evaluation. The unit-test approach is deterministic but may miss nuanced quality issues that human evaluators would catch.

- The downstream pretraining ablation (+1.3 points at 50B tokens) provides evidence that OCR quality matters for LM training, at least in this experimental setup.

Open questions:

- Robustness beyond English academic PDFs: How do the reported gains generalize to document distributions that are not 56% academic papers, and to multilingual documents (given explicit English-only filtering)?

- Anchoring vs. model quality: How much of the Table 4 advantage comes from model weights vs. prompt engineering (anchoring) vs. post-processing heuristics (rotation, retries, truncation)? Ablations on GPT-4o and Qwen show modest anchoring gains (~1 point), but the fine-tuned model may benefit more.

- Long document failure modes: Sampling only 3 pages per PDF during training may leave gaps in handling complex multi-page structures (cross-references, continued tables, section numbering). Production use cases likely encounter these patterns frequently.

- Structured output overhead: The JSON schema adds tokens and introduces parsing failures (12% retry rate). olmOCR 2’s shift to plain-text generation suggests this was a recognized pain point.

NovaChart: A Large-scale Dataset towards Chart Understanding and Generation of Multimodal Large Language Models

Paper: NovaChart: A Large-scale Dataset towards Chart Understanding and Generation of Multimodal Large Language Models (ACM MM ‘24)

Code: Elucidator-V/NovaChart

Data: ympan/novachart

License: MIT (code), Apache-2.0 (dataset)

TL;DR

NovaChart is a chart-focused instruction-tuning dataset designed to improve MLLMs on both chart understanding and chart generation. The authors report 47K high-resolution chart images and 856K instruction-response pairs, spanning 18 chart types and 15 tasks, backed by per-chart metadata including data points, visual elements, source tables, and rendering code.

What kind of paper is this?

Dominant: $\Psi_{\text{Resource}}$

- Headline contribution is a large-scale dataset + tooling for chart instruction tuning, including metadata designed for scalability.

Secondary: $\Psi_{\text{Method}}$ (data engine/pipeline) + $\Psi_{\text{Evaluation}}$ (task suite + metrics + model comparisons)

Rough superposition:

- $\approx 0.6\,\Psi_{\text{Resource}} + 0.25\,\Psi_{\text{Method}} + 0.15\,\Psi_{\text{Evaluation}}$

What is the motivation?

The authors identify three claimed limitations in existing chart datasets used for training chart-capable MLLMs:

Chart type coverage is narrow and imbalanced

- Many datasets focus on bar/line/pie; long-tail types (histograms, radar, word clouds, etc.) are underrepresented.

Task diversity is restricted

- Prior work often centers on extraction/QA/summarization; practical tasks like conditional extraction, visual element recognition, and chart-type conversion are missing or rare.

Scalability is limited by sparse annotations

- Many datasets provide only data points (sometimes colors), but not source tables and visualization code, which blocks easy task/instruction expansion via LLMs.

What is the novelty?

Dataset scale + coverage

47K chart images, 856K instruction-response pairs

18 chart types (explicitly enumerated in the paper’s overview figures/tables):

- Table (as a “chart type”), single/multi-class line plot, single/multi-class scatter plot, single/multi-class bar plot, univariate/bivariate histogram, correlation heatmap, pie, ring, rose, radar, box, sankey, knowledge graph, word cloud.

15 tasks, grouped into 4 capability buckets:

- Chart Data Understanding: data identification, data comparison, conditional data extraction, data referring

- Chart Visual Understanding: color recognition, style detection, chart classification, visual elements identification/retrieval, text extraction

- Chart Summarization and Analysis: chart pattern recognition, chart analysis, chart summarization

- Chart Generation: chart blueprint, chart type conversion, table-to-chart generation

“Scalable metadata” design

For each chart, NovaChart includes four kinds of annotations:

- Data points (numeric/statistical values shown)

- Visual elements (e.g., colors, style choices)

- Source data (the originating sub-table)

- Visualization code (Python rendering code; exception noted for knowledge graphs where code is unavailable)

Data generation engine (pipeline novelty)

They emphasize an end-to-end engine that produces: raw tables → curated subtables + stats → styled chart images + code → instruction-response pairs.

What experiments were performed?

Dataset comparisons

Compares NovaChart against ChartQA, PlotQA, Chart-to-text, SimChart9K, UniChart, MMC, ChartLlama.

Key table claims:

- NovaChart: 18 types, 47K images, 856K instruction pairs, 15 tasks, covering understanding + generation.

- Metadata comparison table shows NovaChart is the only listed dataset providing data points + visual elements + source data + visualization code (others lack source data/code).

Fine-tuning + evaluation

Fine-tune 3 open MLLMs:

- LLaVA-v1.5, InternLM-XComposer, Qwen-VL-Chat

Evaluate on an independent evaluation set spanning all 15 tasks.

Metrics (by task type):

- Exact Match (EM) for classification/QA

- RNSS for numerical results in data-referring tasks

- Levenshtein distance + SCRM for multi-point extraction

- GPT-Score for open-ended summarization/analysis and chart generation tasks
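The two simplest metrics can be sketched directly. The case and whitespace normalization in `exact_match` is an assumption, and RNSS, SCRM, and GPT-Score are omitted because their definitions are not reproduced in these notes.

```python
def exact_match(prediction: str, reference: str) -> bool:
    """Exact Match after trimming whitespace and lowercasing (assumed normalization)."""
    return prediction.strip().lower() == reference.strip().lower()


def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    if len(a) < len(b):
        a, b = b, a  # keep the inner row as short as possible
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


em = exact_match(" Bar chart ", "bar chart")
distance = levenshtein("kitten", "sitting")
```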

Reported outcomes

Across tasks, fine-tuning yields large relative improvements reported as 35.47%–619.47% (range across tasks/models).

Qualitative examples show improvements in:

- Correctly interpreting axes/distributions for analysis

- Producing executable chart conversion code (baseline sometimes outputs “not directly executable”).

What are the outcomes/limitations?

Outcomes the paper emphasizes

- The authors report gains across all 15 tasks after tuning, including generation tasks. Relative improvement ranges are reported as 35.47% to 619.47%, though baseline performance levels vary significantly by task.

- Chart classification and text extraction reportedly reach “excellent” performance in their evaluation setup.

- Improvements are claimed across chart types beyond common bar/line/pie charts, attributed to broader type coverage in the training data. Per-type breakdowns are not provided.

Limitations / open questions

Dataset design and coverage:

- Chart type distribution is not reported; balance across the 18 claimed types is unclear, which may affect model generalization.

- Knowledge graph charts explicitly lack visualization code, breaking the “full metadata” claim for at least one chart type.

- Source data for the 47K charts is not specified; provenance, diversity, and potential biases are unclear.

Evaluation concerns:

- Open-ended task evaluation relies on GPT-Score (LLM judge), introducing model-judge dependence and sensitivity to prompt formulation. No inter-rater reliability or correlation with human judgment is reported.

- The paper acknowledges improvements are “limited” for certain analysis and generation tasks, suggesting the dataset may not sufficiently address those capabilities.

- Accuracy drop magnitude when moving from extracted tables to predicted tables is not quantified for the fine-tuned models.

Reproducibility gaps:

- Critical hyperparameters (fine-tuning learning rates, batch sizes, epochs) are deferred to appendices not included in the provided excerpt.

- Chart-type-specific attribute rules and full metric definitions are also appendix-only.

- Training compute (GPU hours, hardware specs) is not reported.

Reproducibility Details

Model

NovaChart itself is a dataset, but the paper fine-tunes 3 MLLMs: LLaVA-v1.5, InternLM-XComposer, Qwen-VL-Chat. Hyperparameters and fine-tuning details are stated as being in Appendix 2 (not included in the visible content).

Data

Dataset size and structure:

- 47K chart images (high-resolution)

- 856K instruction-response pairs

- Built from:

- 1.3K raw tables (post-filtering)

- 28K curated sub-tables (“source data”) used to derive chart statistics

Chart types (18):

From the overview and distribution figure, the chart types include:

- Table

- Single-class and multi-class: line plot, scatter plot, bar plot

- Univariate and bivariate histogram

- Correlation heatmap

- Pie, ring, rose, radar

- Box plot

- Sankey

- Knowledge graph

- Word cloud

Tasks (15):

Grouped as:

- Chart Data Understanding: data identification; data comparison; conditional extraction; data referring

- Chart Visual Understanding: color recognition; style detection; chart classification; visual elements identification/retrieval; text extraction

- Chart Summarization and Analysis: pattern recognition; analysis; summarization

- Chart Generation: blueprint; chart type conversion; table-to-chart generation

Metadata fields (per chart):

The paper’s “chart metadata” is explicitly:

- Data points

- Visual elements

- Source data (sub-table)

- Visualization code (rendering code)

Algorithms / Training (Data Engine)

The paper describes a 4-stage pipeline:

1. Raw Data Acquisition

- Source: Kaggle relational tables with high user votes.

- Filtering/preprocess:

- Remove non-English tables

- Remove tables with too few rows (`< 50`)

- Remove unnamed columns

- Remove columns with too many missing values (`> 90%`)

- Remove columns with overly long contents (example: movie reviews)
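The row and column filters can be sketched with plain Python containers. The 50-row and 90% thresholds come from the list above; the `Table` representation, the "Unnamed" prefix test, and the 200-character cutoff for "overly long" contents are assumptions, and the non-English filter is omitted.

```python
from typing import Optional

Table = dict[str, list]  # column name -> column values (hypothetical representation)


def clean_table(table: Table, min_rows: int = 50, max_missing: float = 0.9,
                max_avg_len: int = 200) -> Optional[Table]:
    """Apply the row/column filters; return None if the table is dropped."""
    n_rows = max((len(col) for col in table.values()), default=0)
    if n_rows < min_rows:
        return None  # too few rows
    kept = {}
    for name, values in table.items():
        if not name.strip() or name.lower().startswith("unnamed"):
            continue  # unnamed column
        present = [v for v in values if v is not None and v != ""]
        if 1 - len(present) / n_rows > max_missing:
            continue  # too many missing values
        strings = [v for v in present if isinstance(v, str)]
        if strings and sum(map(len, strings)) / len(strings) > max_avg_len:
            continue  # overly long free text, e.g. reviews
        kept[name] = values
    return kept or None


raw = {
    "year": list(range(60)),
    "Unnamed: 0": list(range(60)),
    "review": ["x" * 500] * 60,
    "sparse": [None] * 59 + [1],
}
cleaned = clean_table(raw)
```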

2. Data Curation

- Goal: sample attributes + rows to produce many sub-tables and then compute chart statistics.

- Attribute type schema (used for choosing columns):

- Numeric: numeric dependent variables (e.g., y-axis)

- Unique-Numeric: numeric, mostly unique (e.g., year), usable as independent variable (x-axis for lines)

- Categorical: string with `<= 5` unique values (class labels for multi-class charts)

- Enumerable: `<= 25` unique values (qualitative variable for bar/pie, etc.)

- Attribute classification model:

- Uses GPT-3.5-turbo with in-context learning; the prompt includes the attribute-type definitions, the number of unique values, example values, and an instruction to classify the attribute.

- Sub-table sampling:

- For each chart type: randomly select the required attribute types and sample 30–50 rows from the raw table

- If a raw table cannot yield a sub-table meeting requirements, skip it

- Compute chart statistics from the sampled sub-table to derive chart data points
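A sketch of the sub-table sampling step, assuming columns have already been labeled with attribute types. The `CHART_REQUIREMENTS` mapping and its chart-type names are hypothetical; only the 30–50 row range and the skip-if-unsatisfiable rule come from the description above.

```python
import random
from typing import Optional

# Hypothetical requirements: which attribute types each chart type needs.
CHART_REQUIREMENTS = {
    "single_class_line": ["Unique-Numeric", "Numeric"],
    "multi_class_bar": ["Enumerable", "Numeric", "Categorical"],
    "pie": ["Enumerable", "Numeric"],
}


def sample_subtable(rows: list[dict], column_types: dict[str, str],
                    chart_type: str, rng: random.Random) -> Optional[list[dict]]:
    """Pick one column per required attribute type and sample 30-50 rows."""
    chosen, available = [], dict(column_types)
    for needed in CHART_REQUIREMENTS[chart_type]:
        candidates = [c for c, t in available.items() if t == needed]
        if not candidates:
            return None  # table cannot satisfy this chart type; skip it
        pick = rng.choice(candidates)
        chosen.append(pick)
        del available[pick]  # do not reuse a column for two roles
    if len(rows) < 30:
        return None
    k = rng.randint(30, min(50, len(rows)))
    return [{c: row[c] for c in chosen} for row in rng.sample(rows, k)]


rows = [{"year": 1950 + i, "sales": i * 2.0, "region": "N"} for i in range(80)]
types = {"year": "Unique-Numeric", "sales": "Numeric", "region": "Categorical"}
sub = sample_subtable(rows, types, "single_class_line", random.Random(0))
skipped = sample_subtable(rows, types, "pie", random.Random(0))
```

Chart statistics (for example, per-category sums for a pie chart) would then be computed from `sub` to produce the data points stored in the metadata.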

3. Image Styling and Visualization

- Rendering libraries mentioned: Matplotlib, Seaborn, Pyecharts.

- Visual diversity: randomize visual elements (example: colors, shadow, style) and render multiple images per data-point instance.

- The paper states this stage yields ~40K charts initially, then expanded to 47K after later extensions.

4. Instruction Formulation

- Task design: 15 tasks, including “new” ones the authors emphasize (conditional extraction; some visual element checks like fitting-curve existence in histograms; chart-to-chart conversions).

- LLM usage:

- For tasks with answers obtainable from metadata: use GPT-4 to generate diverse instruction templates and convert to instruction-following format.

- For more open-ended tasks (summarization/analysis): use GPT-3.5-turbo, providing a prompt with the task instruction, in-context demos, and the relevant chart metadata; the model output becomes the response.

Data expansion steps:

- Add line-chart source data from Statista due to shortage of suitable line-chart subtables from Kaggle.

- Add HTML/CSS-rendered tables as an additional “chart” type.

- Manually collect some chart types (explicitly mentioned: knowledge graphs and word clouds); knowledge-graph visualization code unavailable.

Evaluation

Metrics and protocols:

- EM for classification/QA tasks, RNSS for numeric data-referring, Levenshtein + SCRM for multi-point extraction, GPT-Score for open-ended and generation tasks.

- Comparisons shown in figures:

- Per-task spider/radar charts before vs after tuning for the three models

- Per-chart-type performance plots on selected tasks (example shown for InternLM-XComposer).

Reported improvement magnitude:

- Across tasks: 35.47%–619.47% relative improvements after fine-tuning (paper-reported range).

Hardware / Production

The main text does not list GPU types, wall-clock training time, or serving throughput; it points to appendices for fine-tuning details.

Data Availability

Is all the data publicly shared?

They claim the dataset is publicly available, and they point to a public GitHub repo as the distribution entry point.

Whether “all the data” is released depends on which artifacts are counted:

Released (public):

- GitHub repo (code + toolkit; also points to where to download the dataset): Elucidator-V/NovaChart

- Hugging Face dataset linked from the GitHub repo as the download location for the “full NovaChart dataset”: ympan/novachart

Potentially not fully released / ambiguous:

- The paper emphasizes rich chart metadata (data points, visual elements, source data, visualization code).

- But a public GitHub issue reports that only the instruction-tuning JSONL files were found and asks whether metadata will be released, suggesting at least some parts may be missing or not obvious in the current release.

Underlying raw sources are likely not fully redistributable as-is:

- Their pipeline uses Kaggle tables and additionally Statista tables for line charts.

- The paper doesn’t specify whether the original Kaggle/Statista tables are redistributed (and those sources typically have their own licenses/ToS).

Where is it shared?

- GitHub: Elucidator-V/NovaChart (paper and repo both point here)

- Hugging Face (dataset download): ympan/novachart, which contains a ~10 GB `novachartv1.rar` file

What license?

- GitHub repository license: marked as MIT on the repo page (this generally covers the code and repo contents, unless the repo says otherwise)

- Hugging Face dataset license: listed as Apache-2.0 on the dataset page/README metadata

- Paper itself: does not appear to state a dataset license in the main text; it only states availability and links

GOT-OCR2.0: Unified End-to-End OCR with General Optical Character Theory

GOT-OCR2.0 — Notes

TL;DR

GOT-OCR2.0 is a unified 580M-parameter encoder-decoder OCR model that treats diverse optical signals (plain text, formulas, tables, charts, sheet music, geometric shapes) as a single “character” space. The system introduces interactive region OCR via box/color prompts, dynamic resolution through multi-crop tiling, and multi-page OCR via decoder post-training. On the Fox benchmark for dense document OCR, GOT achieves edit distance 0.035 (EN) and 0.038 (ZH) with F1 scores of 0.972 and 0.980 respectively. High-quality output is limited to English and Chinese.

What kind of paper is this?

Dominant: $\Psi_{\text{Method}}$ (new unified model architecture + three-stage training recipe + task unification framework).

Secondary: $\Psi_{\text{Resource}}$ (extensive synthetic data engines for formulas, molecules, tables, sheet music, geometry, and charts; open-source code and model release).

The paper introduces “General OCR Theory” (OCR-2.0) as a conceptual framework for treating diverse optical signals as characters, with a simple encoder-decoder architecture trained via multi-stage optimization. The resource component is substantial: the authors describe detailed synthetic data engines totaling $>$10M image-text pairs across stages, with open-source code and Apache 2.0 model weights provided.

What is the motivation?

- OCR-1.0 pipeline brittleness and cost: Traditional multi-module systems (detection/cropping/recognition) are prone to local optima, cascading errors, and high maintenance overhead.

- Task fragmentation: Different specialized models exist for text detection, scene OCR, formula recognition, table extraction, etc. Practitioners must choose among many models for different subtasks, increasing deployment complexity.

- Broadened “OCR” demand: The authors frame the target as “intelligent processing of man-made optical signals” beyond plain text—including formulas, tables, charts, sheet music, molecular structures, and geometric diagrams.

- Limited multilingual support in end-to-end systems: Prior unified OCR models often focus narrowly on English or require separate models for different languages.

What is the novelty?

General OCR Theory (“OCR-2.0”) framing: Treats diverse optical signals (plain text, mathematical notation, tables, charts, sheet music, molecular structures, geometric shapes) as “characters” in a unified space, aiming for a single end-to-end model that handles multiple OCR tasks.

Simple encoder-decoder OCR architecture: Vision encoder (VitDet base with local attention, $\sim$80M params) + linear connector + language decoder (Qwen-0.5B, total 580M params). The encoder compresses each $1024 \times 1024$ image to 256 tokens of dimension 1024 ($256 \times 1024$), and the decoder supports up to 8K context length.

Feature extensions via decoder post-training: Fine-grained region OCR (box and color prompts), dynamic resolution (multi-crop sliding window with max 12 tiles following InternVL-1.5), and multi-page OCR are added in Stage 3 without modifying the vision encoder. This preserves encoder pretraining while expanding capabilities.
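
The multi-crop step can be pictured with a small sketch. It assumes an InternVL-style rule: among all tile grids with at most 12 tiles, pick the one whose aspect ratio is closest to the image's, then cut the resized image into $1024 \times 1024$ crops. The function names and the tie-breaking rule (fewest tiles wins) are illustrative simplifications, not the released implementation.

```python
def choose_tile_grid(width: int, height: int, max_tiles: int = 12) -> tuple[int, int]:
    """Return (cols, rows) with cols * rows <= max_tiles whose aspect ratio
    is closest to width / height. Ties go to the grid with fewer tiles
    (a simplification; the real rule may break ties differently)."""
    target = width / height
    candidates = [
        (cols, rows)
        for cols in range(1, max_tiles + 1)
        for rows in range(1, max_tiles + 1)
        if cols * rows <= max_tiles
    ]
    return min(candidates, key=lambda g: (abs(g[0] / g[1] - target), g[0] * g[1]))


def tile_boxes(cols: int, rows: int, tile: int = 1024) -> list[tuple[int, int, int, int]]:
    """Crop boxes (left, top, right, bottom), row by row, for an image that
    has already been resized to (cols * tile) x (rows * tile)."""
    return [
        (c * tile, r * tile, (c + 1) * tile, (r + 1) * tile)
        for r in range(rows)
        for c in range(cols)
    ]


# A wide 2048x1024 page maps to a 2x1 grid, i.e. two 1024x1024 crops.
cols, rows = choose_tile_grid(2048, 1024)
print((cols, rows), tile_boxes(cols, rows))
```

Each crop is then encoded independently, which is why the tile cap matters: under this sketch a 12-tile page would produce 12 times as many image tokens as a single-scale page.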

Staged training strategy with data mixing: Three-stage pipeline (encoder pretrain with tiny OPT-125M, joint training with Qwen-0.5B on formatted/general OCR, decoder post-train for features) with 80% mixing of previous stage data to reduce regression.
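
One way to read the 80% mixing rule is as a replay buffer: a random 80% subset of the previous stage's samples is added to the new stage's data. The sketch below follows that interpretation, which is an assumption on my part; `build_stage_dataset` and its parameters are hypothetical names, not the authors' code.

```python
import random


def build_stage_dataset(new_samples, previous_samples, keep_fraction=0.8, seed=0):
    """Combine new-stage samples with a random subset of previous-stage samples.

    keep_fraction=0.8 mirrors the 80% figure in the notes; how the authors
    actually sample and weight the replayed data is not specified here.
    """
    rng = random.Random(seed)
    previous = list(previous_samples)
    n_keep = round(len(previous) * keep_fraction)
    replay = rng.sample(previous, n_keep)   # sampled without replacement
    mixed = list(new_samples) + replay
    rng.shuffle(mixed)                      # interleave old and new samples
    return mixed


# Toy example with hypothetical sample identifiers.
stage2 = [f"stage2-{i}" for i in range(10)]
stage3 = [f"stage3-{i}" for i in range(3)]
mixed = build_stage_dataset(stage3, stage2)
print(len(mixed))
```

Replaying earlier data in this way is a common guard against forgetting, which matches the stated goal of reducing regression on previously learned tasks.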

Extensive synthetic data engines: Stage 1 uses 5M pure text pairs from LAION/Wukong/PDFs; Stage 2 adds 1M formulas (arXiv LaTeX $\rightarrow$ Mathpix format, $>$20$\times$ faster rendering), 1M molecules (ChEMBL SMILES), 0.3M tables, 1.2M full-page formatted docs, 0.5M sheet music (GrandStaff + Verovio), 1M geometry (TikZ), and 2M charts (Matplotlib/Pyecharts); Stage 3 constructs 0.6M fine-grained, 0.5M multi-crop, and 0.2M multi-page samples.

What experiments were performed?

The authors evaluate across five OCR task families:

1. Plain document OCR: Fox benchmark (dense multi-page documents); word-level segmentation; metrics include edit distance, F1, precision, recall, BLEU, METEOR. Compares GOT (580M) against larger LVLMs.

2. Scene text OCR: 400 natural scene images (200 English, 200 Chinese) with manually corrected ground truth; character-level segmentation.

3. Formatted document OCR: 90 pages (English + Chinese) with Mathpix pseudo-labels manually corrected; evaluates single-scale vs multi-crop inference on formula and table metrics.

4. Fine-grained OCR: Box-guided and color-guided referential OCR evaluation in English and Chinese; compares GOT to Fox on region extraction accuracy.

5. General OCR: Chart OCR using ChartQA (structure-extraction version) and PlotQA benchmarks; reports AP@strict/slight/high for chart structure accuracy.
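
The text metrics used across these task families can be sketched in a few lines. This is a minimal illustration, assuming edit distance is normalized by the longer string and that precision/recall/F1 are computed over a bag of tokens, with words for documents and characters for scene text. The paper's exact implementation is not given in these notes, so treat the function names and normalization as assumptions.

```python
from collections import Counter


def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        curr = [i]
        for j, y in enumerate(b, 1):
            curr.append(min(prev[j] + 1,              # delete x
                            curr[j - 1] + 1,          # insert y
                            prev[j - 1] + (x != y)))  # substitute or match
        prev = curr
    return prev[-1]


def normalized_edit_distance(pred: str, ref: str) -> float:
    """Edit distance divided by the longer length, so the result lies in [0, 1]."""
    if not pred and not ref:
        return 0.0
    return levenshtein(pred, ref) / max(len(pred), len(ref))


def prf1(pred: str, ref: str, level: str = "word") -> tuple[float, float, float]:
    """Bag-of-tokens precision, recall, and F1 at word or character level."""
    if level == "word":
        tokenize = str.split
    else:
        tokenize = lambda s: [ch for ch in s if not ch.isspace()]
    p, r = Counter(tokenize(pred)), Counter(tokenize(ref))
    overlap = sum((p & r).values())   # multiset intersection
    precision = overlap / sum(p.values()) if p else 0.0
    recall = overlap / sum(r.values()) if r else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


print(normalized_edit_distance("kitten", "sitting"))
print(prf1("the quick brown fox", "the quick red fox", level="word"))
```

Character-level segmentation suits Chinese and scene text, where whitespace does not delimit words; word-level segmentation is the natural choice for dense English documents.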

Test data filtering: The authors apply “strict text similarity filtering” to reduce overlap between training and test text, though details are not provided.
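
Since the filtering procedure is unspecified, the following is only one plausible shape for it: drop any test candidate whose text is too similar to some training text. The similarity measure (`difflib.SequenceMatcher`), the 0.9 threshold, and the choice to filter the test side are all assumptions for illustration.

```python
from difflib import SequenceMatcher


def filter_test_overlap(test_texts, train_texts, threshold=0.9):
    """Keep only test texts whose similarity to every training text is
    below `threshold` (hypothetical value; the paper gives none)."""
    kept = []
    for candidate in test_texts:
        too_similar = any(
            SequenceMatcher(None, candidate, seen).ratio() >= threshold
            for seen in train_texts
        )
        if not too_similar:
            kept.append(candidate)
    return kept


train = ["the quick brown fox jumps over the lazy dog"]
test = [
    "the quick brown fox jumps over the lazy dog",   # exact duplicate, dropped
    "an unrelated sentence about sheet music",        # kept
]
print(filter_test_overlap(test, train))
```

This pairwise comparison is quadratic in corpus size, so a real pipeline at the scale described would likely need hashing or n-gram indexing instead.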

What are the outcomes/limitations?

Outcomes

Plain document OCR (Fox benchmark):

| Model | Lang | Edit Dist $\downarrow$ | F1 $\uparrow$ | Precision $\uparrow$ | Recall $\uparrow$ | BLEU $\uparrow$ | METEOR $\uparrow$ |

|---|---|---|---|---|---|---|---|

| GOT | EN | 0.035 | 0.972 | 0.971 | 0.973 | 0.947 | 0.958 |

| GOT | ZH | 0.038 | 0.980 | 0.982 | 0.978 | 0.878 | 0.939 |

GOT achieves very strong dense document OCR metrics. The paper reports that GOT ranks favorably against larger LVLMs in its comparison table. That table names the baselines and their parameter sizes, but numeric results are given here only for GOT.

Scene OCR (400 images):

| Model | Lang | Edit Dist $\downarrow$ | F1 $\uparrow$ | Precision $\uparrow$ | Recall $\uparrow$ | BLEU $\uparrow$ | METEOR $\uparrow$ |

|---|---|---|---|---|---|---|---|

| GOT | EN | 0.112 | 0.926 | 0.934 | 0.927 | 0.676 | 0.896 |

| GOT | ZH | 0.096 | 0.928 | 0.914 | 0.954 | 0.641 | 0.928 |

Scene OCR edit distance is higher than document OCR (0.112 EN vs 0.035), reflecting the increased difficulty of natural scene text with perspective distortion, occlusion, and varied fonts.

Formatted document OCR (90 pages, single vs multi-crop):

| Setup | Formula F1 $\uparrow$ | Table METEOR $\uparrow$ |

|---|---|---|

| Single-scale | 0.749 | 0.760 |

| Multi-crop | 0.865 | 0.811 |

Multi-crop inference provides substantial gains for formula recognition (+11.6 F1 points) and table extraction (+5.1 METEOR points), demonstrating the effectiveness of dynamic resolution for high-detail regions.

Chart OCR:

| Dataset | AP@strict $\uparrow$ | AP@slight $\uparrow$ | AP@high $\uparrow$ |

|---|---|---|---|

| ChartQA-SE | 0.747 | 0.845 | 0.867 |

| PlotQA-SE | 0.133 | 0.596 | 0.640 |

GOT shows stronger performance on ChartQA (0.747 strict AP) compared to PlotQA (0.133 strict AP), suggesting better generalization to the ChartQA structure extraction task.

Fine-grained OCR: The authors report that GOT outperforms Fox on both box-guided and color-guided referential OCR in English and Chinese (Table 4 in paper); the numeric values are not reproduced here.

Limitations and open questions

Language coverage: The authors explicitly state they “mainly support English and Chinese” and “cannot guarantee OCR quality for other languages” even if some appear in crawled PDFs. Scaling to dozens of languages (Arabic, Cyrillic, Indic scripts, etc.) is not addressed. The single multilingual model design may face capacity constraints beyond 2-3 languages.

Geometry scope: The authors describe geometry rendering as “preliminary” and state the model “can only recognize basic geometry at present.” Complex geometric diagrams, 3D figures, and advanced TikZ constructs are likely beyond current capabilities.

Ablation gaps: The paper does not ablate the contribution of individual components:

- How much gain comes from VitDet local attention vs other encoders?

- What is the impact of the 80% data mixing strategy vs training on new data only?

- How much do synthetic data engines contribute vs real data?

No throughput or latency data: Training hardware is reported (64 L40s GPUs), but the paper omits inference latency (ms/page), throughput (pages/s), and cost-per-page estimates. This limits comparison to olmOCR ($176 per million pages on L40S) or MinerU2.5 (1.224 pages/s on A100).

Test set construction and leakage: The authors mention “strict text similarity filtering” to reduce train/test overlap but do not specify the threshold, filtering method, or amount of data removed. External validation on established benchmarks beyond Fox and ChartQA is limited.

Multi-page OCR evaluation: The paper describes 200K multi-page training samples (2-8 pages each, <8K tokens total) but does not evaluate multi-page OCR performance separately. It is unclear how well page breaks and cross-page references are handled compared to single-page inference.

Comparison baseline details: The paper compares GOT to “larger LVLMs” on Fox, but the extracted text omits model names, parameter sizes, and numeric results for the baselines. This limits how far the comparison can be checked from these notes.

Model

Architecture

High-level design: Vision encoder + linear connector + language decoder.

Vision encoder:

- Backbone: VitDet (base variant), chosen for local attention mechanism to reduce compute on high-resolution images.

- Parameters: $\sim$80M.

- Tokenization: Final layers compress the $1024 \times 1024 \times 3$ input image to $256 \times 1024$ image tokens (256 tokens, each 1024-dimensional).

- Projection: Linear layer ($1024 \times 768$) projects image tokens into language model dimension during encoder pretraining (described in Stage 1).
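
The shapes in the list above can be traced with simple arithmetic. The sketch assumes a standard $16 \times 16$ ViT patch size and a further $4\times$ per-side reduction in the final layers; both values are chosen because they reproduce the 256-token figure and are not stated in these notes. The class and field names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EncoderShapes:
    image_size: int = 1024    # input side length (from the notes)
    patch_size: int = 16      # assumption: standard ViT patch size
    downsample: int = 4       # assumption: extra per-side reduction in final layers
    token_dim: int = 1024     # channel width of each image token (from the notes)
    decoder_dim: int = 768    # Stage 1 projection target (from the notes)

    @property
    def patch_grid(self) -> int:
        """Patches per side before the final compression."""
        return self.image_size // self.patch_size

    @property
    def num_tokens(self) -> int:
        """Image tokens handed to the connector."""
        return (self.patch_grid // self.downsample) ** 2

    @property
    def pixels_per_token(self) -> int:
        return self.image_size ** 2 // self.num_tokens

    @property
    def token_reduction(self) -> int:
        """How many initial patches are merged into one output token."""
        return self.patch_grid ** 2 // self.num_tokens

    @property
    def projector_weights(self) -> int:
        """Weight count of the linear connector, bias excluded."""
        return self.token_dim * self.decoder_dim


shapes = EncoderShapes()
print(shapes.patch_grid, shapes.num_tokens, shapes.pixels_per_token,
      shapes.token_reduction, shapes.projector_weights)
```

Under these assumptions each token covers a $64 \times 64$ pixel region, and the connector is small compared with the $\sim$80M-parameter encoder.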

Language decoder:

- Stage 1 (encoder pretrain): OPT-125M serves as a “tiny decoder” to efficiently pass gradients to the encoder.

- Stage 2-3 (main model): Qwen-0.5B replaces OPT-125M for broader OCR-2.0 training.

- Context length: Supports up to 8K tokens (increased from 4K in Stage 1 to 6K in Stage 2 to 8K in Stage 3).
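
The decoder schedule is easier to scan as a small table in code. The sketch below encodes only what these notes state, with two labeled assumptions: "4K/6K/8K" are written as 4096/6144/8192, and the encoder is treated as trainable in Stages 1 and 2 because it is described as frozen only in Stage 3. Connector training is not covered in the notes and is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    decoder: str
    max_context_tokens: int   # assumption: "4K" read as 4096, and so on
    train_encoder: bool       # frozen only in Stage 3, per the notes


STAGES = (
    Stage("encoder pretrain", "OPT-125M", 4096, train_encoder=True),
    Stage("joint training", "Qwen-0.5B", 6144, train_encoder=True),
    Stage("decoder post-training", "Qwen-0.5B", 8192, train_encoder=False),
)


def trainable_modules(stage: Stage) -> list[str]:
    """Modules receiving gradient updates in a stage (connector omitted)."""
    return (["encoder"] if stage.train_encoder else []) + ["decoder"]


for stage in STAGES:
    print(stage.name, stage.decoder, stage.max_context_tokens, trainable_modules(stage))
```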

Total parameters: 580M (encoder ~80M + decoder ~500M).

Supported inputs and outputs

Inputs:

- Scene and document images (single-page or multi-page).

- Fine-grained region specification via bounding box coordinates (normalized $\times 1000$) or color-coded frames (red/green/blue).

- Multi-crop tiling for ultra-high-resolution documents (max 12 tiles, $1024 \times 1024$ per tile, InternVL-1.5 cropping strategy).
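
The box-prompt convention above (coordinates normalized $\times 1000$) can be sketched as a pair of helpers. This assumes boxes are given as pixel corners `(x1, y1, x2, y2)` and rounded to integers; the exact prompt template that wraps these numbers is not given in the notes, so it is deliberately omitted.

```python
def normalize_box(box, width, height, scale=1000):
    """Map a pixel box (x1, y1, x2, y2) to integer coordinates in [0, scale]."""
    x1, y1, x2, y2 = box
    return (
        round(x1 / width * scale),
        round(y1 / height * scale),
        round(x2 / width * scale),
        round(y2 / height * scale),
    )


def denormalize_box(box, width, height, scale=1000):
    """Inverse mapping back to pixel coordinates (up to rounding)."""
    x1, y1, x2, y2 = box
    return (
        round(x1 / scale * width),
        round(y1 / scale * height),
        round(x2 / scale * width),
        round(y2 / scale * height),
    )


# A region on a hypothetical 2000x800 page.
norm = normalize_box((200, 200, 1000, 400), width=2000, height=800)
print(norm, denormalize_box(norm, width=2000, height=800))
```

Normalizing to a fixed range makes the prompt independent of the original image resolution, which matters because inputs are resized before encoding.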

Outputs:

- Plain text: Character sequences for scene and document OCR.

- Formatted outputs: Mathpix-markdown (formulas, tables), TikZ (geometry), SMILES (molecules), custom notation (sheet music, charts) via prompting.

Aspect ratio handling: Input images of various shapes are resized to $1024 \times 1024$ via a “compromise” strategy (details not specified in paper).

Design rationale

VitDet local attention: Reduces computational cost on high-resolution images compared to global attention mechanisms. The authors note this is critical for $1024 \times 1024$ inputs.

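High compression ratio: A $1024 \times 1024$ image becomes 256 tokens of dimension 1024, so each token summarizes roughly a $64 \times 64$ pixel region (4096 pixels). The $\sim$16$\times$ figure is best read as a reduction in token count relative to a $16 \times 16$ patch grid (4096 patches $\rightarrow$ 256 tokens), assuming the standard ViT patch size. This keeps dense document pages cheap for the decoder to process.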

Decoder post-training for features: Stage 3 freezes the vision encoder and only trains the decoder on fine-grained, multi-crop, and multi-page data. This design preserves encoder pretraining while adding capabilities, reducing compute cost vs end-to-end retraining.

Contrast to olmOCR2: GOT uses VitDet with local attention for high-resolution processing, while olmOCR2 employs SigLIP with global attention and dynamic tiling. GOT compresses to 256 tokens per image vs olmOCR2’s variable token count (up to 1984 tokens for high-detail regions).